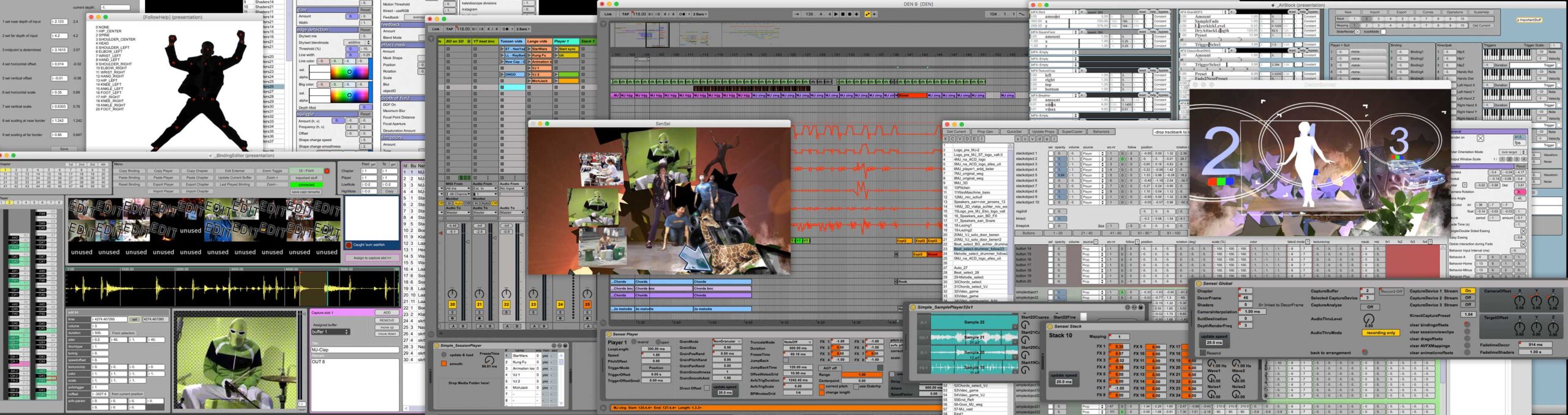

In 2010 and 2011, I developed SenSei with EboStudio. SenSei turns Ableton Live (popular music software) into an audio-visual instrument. The aim of my technical development is to create the ultimate audio-visual instrument: one that makes it possible to work with visuals and music at the same time, in one unified creative process, which is ideal for audio-visual artists like me. SenSei is the successor of SenS IV and the predecessor of EboSuite.

About

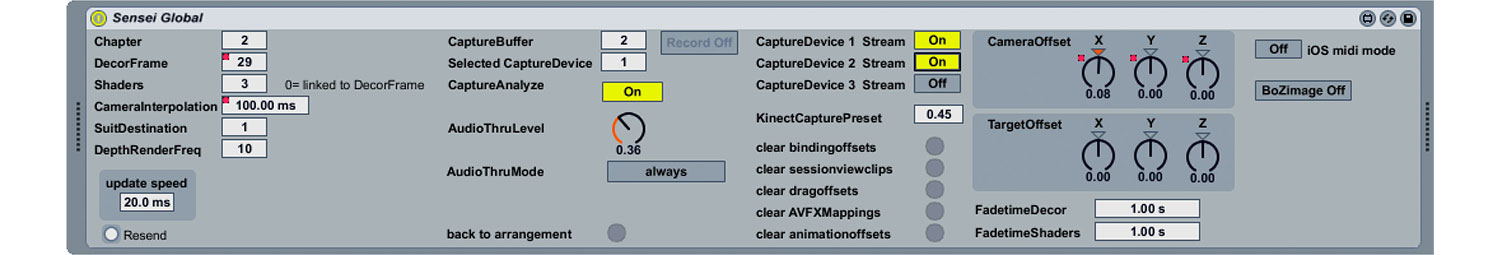

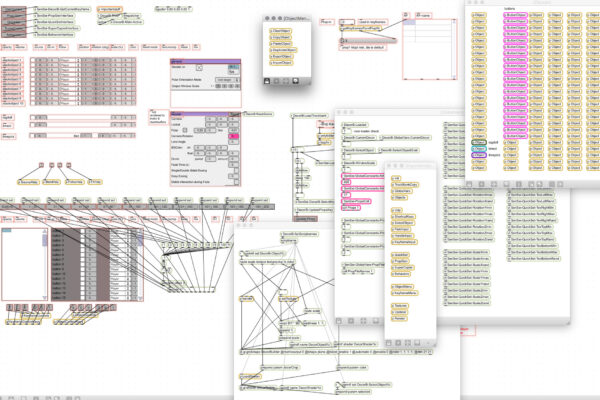

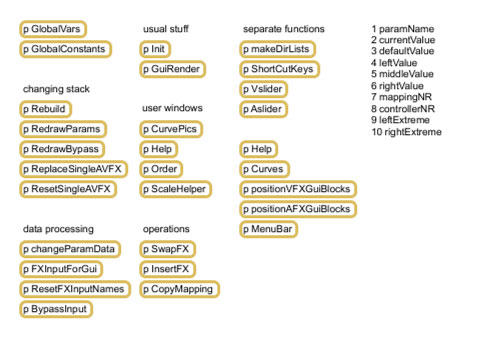

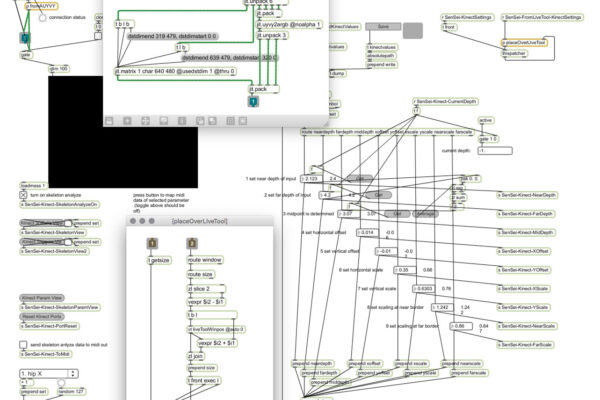

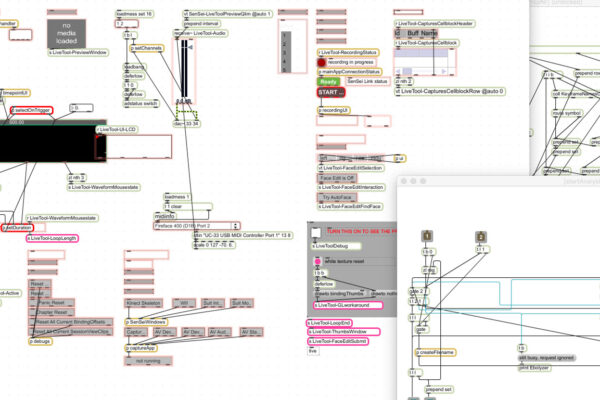

SenSei consists of a set of ‘Max for Live’ plug-ins (see below) that control the SenSei application (written in Max), plus many editors for different aspects of a SenSei project. SenSei’s creative features remained mostly the same as those of SenS IV, but they became more user-friendly, more reliable and better integrated into music software (Ableton Live).

SenSei also added two interesting innovations: Augmented Stage and support for WikiVideo and HyperVideo. In 2014, another interesting innovation was added: the ability to export Interactive Tracks.

Max for Live

In 2009 Ableton released ‘Max for Live’, a platform for building your own instrument and effect plug-ins for Ableton Live with the Max programming language. Seamless integration into music software is essential for the optimal audio-visual instrument, so ‘Max for Live’ was exactly what I was looking for! I had been using Max since 1999 and all my audio-visual instruments were built in Max, so this was a very important development for me.

In 2010, I invested heavily in researching how to integrate SenS as seamlessly as possible into Ableton Live using the new possibilities of Max for Live. Unfortunately, this research suggested that Max for Live was not reliable enough for an optimal integration.

As a result, the integration of SenSei into Ableton Live is not as seamless as hoped. This was disappointing, but we later achieved a much better integration into Live with DVJ Mixer 3.0 in 2013 and with EboSuite.

Main features

Tight integration into Ableton Live

One of the most important goals of SenSei’s development was to integrate it as seamlessly as possible into Ableton Live using the new possibilities of Max for Live. We developed many Max for Live plug-ins to control SenSei’s creative features (read more about them below).

Flexible video playback

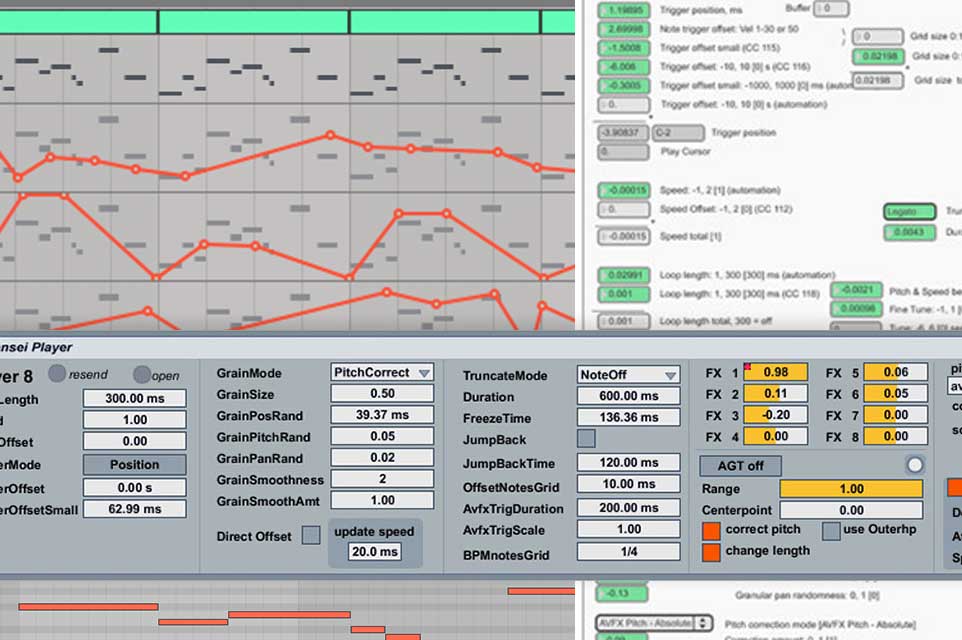

SenSei is an artistic instrument, so maximizing creative, expressive control is central to the SenSei concept. For video playback we created many ways to manipulate the timing and pitch of videos: cutting, slicing, triggering, skrtZz-ing, scrubbing, stretching, pitching and speeding up/down, controlled by track automation, MIDI controls, MIDI notes, analysers, video meta-data and live interfaces.
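
To make a few of these timing controls concrete, here is a minimal Python sketch. It assumes the simplest, tape-style behaviour, where pitch and speed are coupled so that a shift of n semitones multiplies the playback rate by 2^(n/12), a fixed video frame rate, and a 7-bit MIDI CC for scrubbing. The function names are hypothetical and are not taken from SenSei.

```python
import math


def pitch_to_rate(semitones: float) -> float:
    """Playback rate for a pitch shift in equal-tempered semitones (tape-style)."""
    return 2.0 ** (semitones / 12.0)


def loop_frame(elapsed_s: float, rate: float, loop_start: int, loop_end: int,
               fps: float = 25.0) -> int:
    """Frame shown after `elapsed_s` seconds of a looping slice [loop_start, loop_end).

    A negative rate plays the slice backwards; floor() keeps that case correct.
    """
    length = loop_end - loop_start
    advanced = math.floor(elapsed_s * fps * rate)
    return loop_start + advanced % length


def scrub(cc_value: int, first_frame: int, last_frame: int) -> int:
    """Map a 7-bit MIDI CC value (0-127) onto a frame position for scrubbing."""
    cc_value = max(0, min(127, cc_value))
    return first_frame + round(cc_value / 127 * (last_frame - first_frame))
```

For example, `loop_frame(2.0, pitch_to_rate(12), 10, 60)` plays a 50-frame slice at double speed and wraps back to its start after two seconds.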

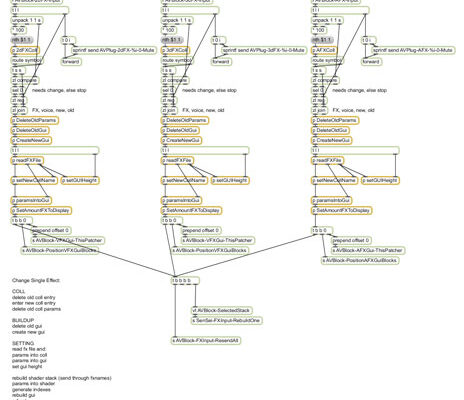

Audio-visual effects

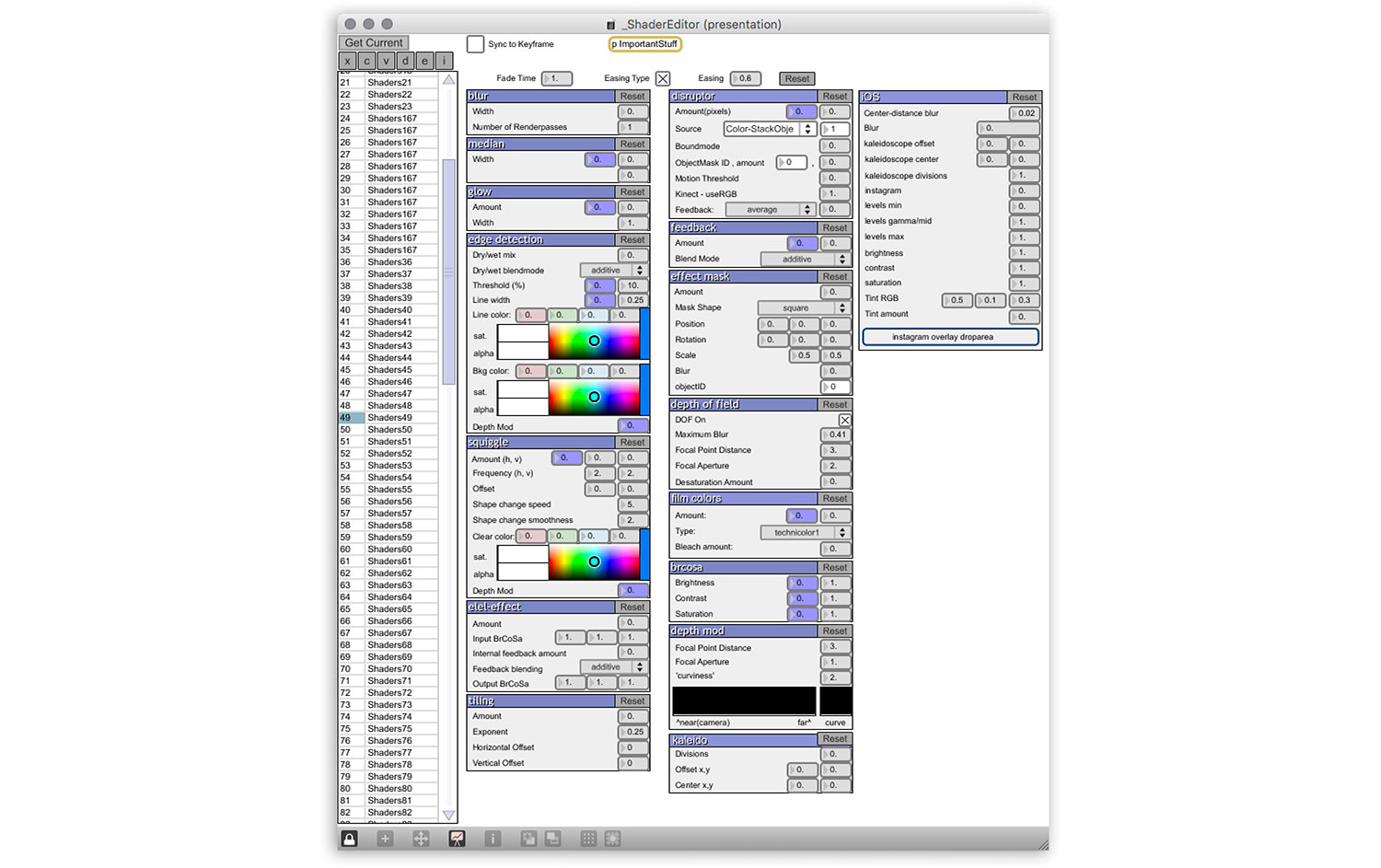

For SenS IV we developed the AVBlock Editor and many 3D visual effects and audio effects (‘blocks’). Combining these blocks creates many interesting ways to manipulate sounds and visuals, and to create completely new shapes and sounds. For SenSei we created many more blocks and improved the AVBlock Editor. We also added many ‘Global Shaders’, which are used to enhance and manipulate the final video mix.

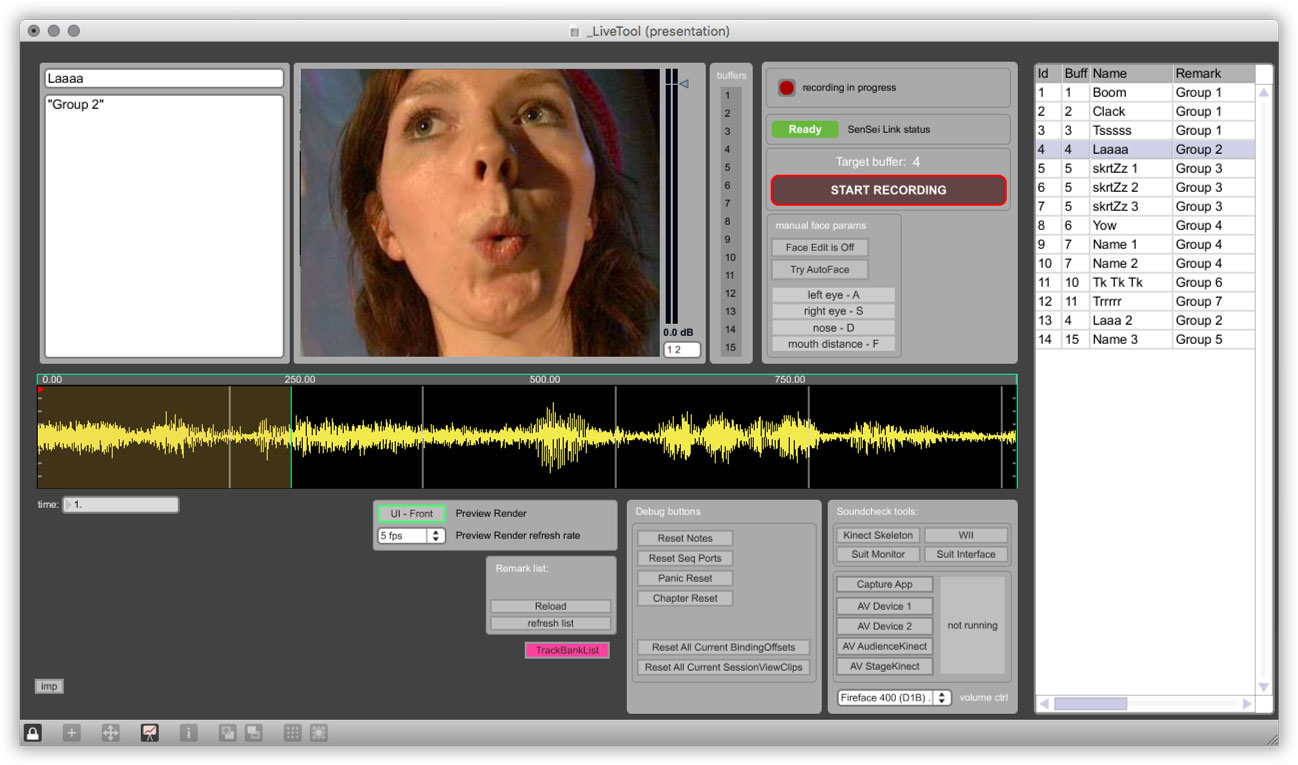

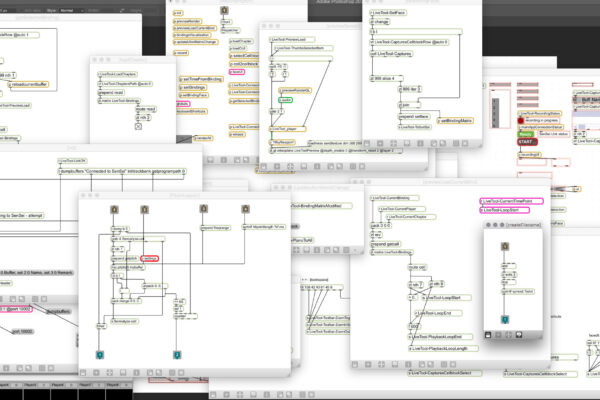

Live video sampling

I had a lot of success with my live video sampling performances with SenS IV. Therefore I invested a lot in making live sampling easier and more reliable (SenS IV crashed pretty often during live recording). We added a live sampling monitor to check the process on stage, and we improved the audio-visual analyser that cuts and filters recordings automatically. We also made it much easier to work with templates live and to prepare for a performance. Read more about this concept on the live video sampling project page.

3D video mixing and real-time motion graphics

For SenSei we considerably expanded SenS IV’s 3D engine for mixing visuals in real time. Up to 255 graphics, video and (3D) camera streams can be combined live in a virtual 3D space, with blend modes and effects. 3D scenes are designed with the ‘Decor Builder’. Layers can be arranged automatically to create complex structures. ‘Animations’ can be added to manipulate position, rotation, scale, effect and color for more dynamic scenes. Read more about this concept on the real-time motion graphics project page.
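
SenSei renders this mix on the graphics card, and the source does not describe its blend formulas. Purely as an illustration, the Python sketch below uses the common per-pixel formulas for four widely used blend modes. It composites layers back to front by depth (painter's algorithm), assuming RGB channels between 0 and 1 and a layer opacity that cross-fades with the mix underneath. The `(depth, rgb, mode, alpha)` layer layout is hypothetical.

```python
from typing import Iterable, Tuple

RGB = Tuple[float, float, float]


def blend(src: RGB, dst: RGB, mode: str = "normal", alpha: float = 1.0) -> RGB:
    """Blend one layer pixel `src` over the mix below it (`dst`); channels in 0..1."""
    if mode == "normal":
        mixed = src
    elif mode == "add":
        mixed = tuple(min(1.0, s + d) for s, d in zip(src, dst))
    elif mode == "multiply":
        mixed = tuple(s * d for s, d in zip(src, dst))
    elif mode == "screen":
        mixed = tuple(1.0 - (1.0 - s) * (1.0 - d) for s, d in zip(src, dst))
    else:
        raise ValueError(f"unknown blend mode: {mode}")
    # Layer opacity cross-fades between the untouched mix and the blended result.
    return tuple(d + alpha * (m - d) for m, d in zip(mixed, dst))


def composite(layers: Iterable[Tuple[float, RGB, str, float]],
              background: RGB = (0.0, 0.0, 0.0)) -> RGB:
    """Composite (depth, rgb, mode, alpha) layers, farthest layer first."""
    out = background
    for _depth, rgb, mode, alpha in sorted(layers, key=lambda layer: layer[0], reverse=True):
        out = blend(rgb, out, mode, alpha)
    return out
```

A real 3D mixer would use a depth buffer rather than sorting whole layers, but the per-pixel blend math is the same idea.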

Augmented Stage

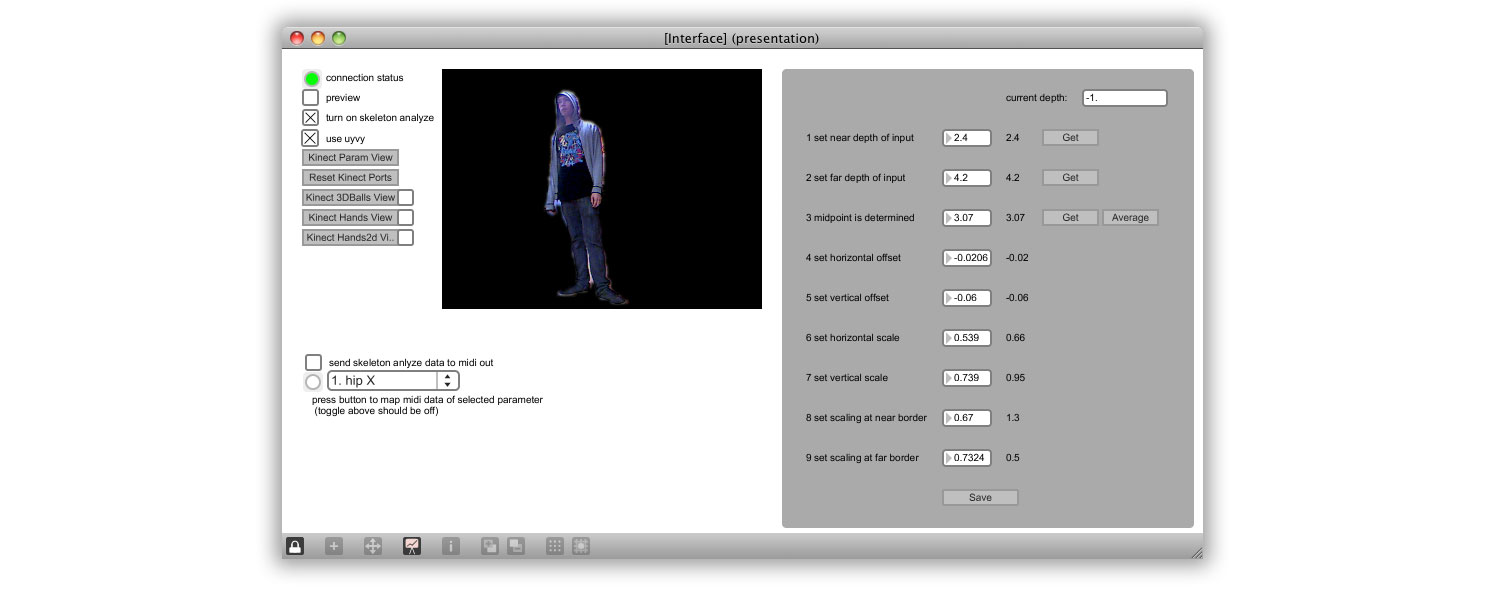

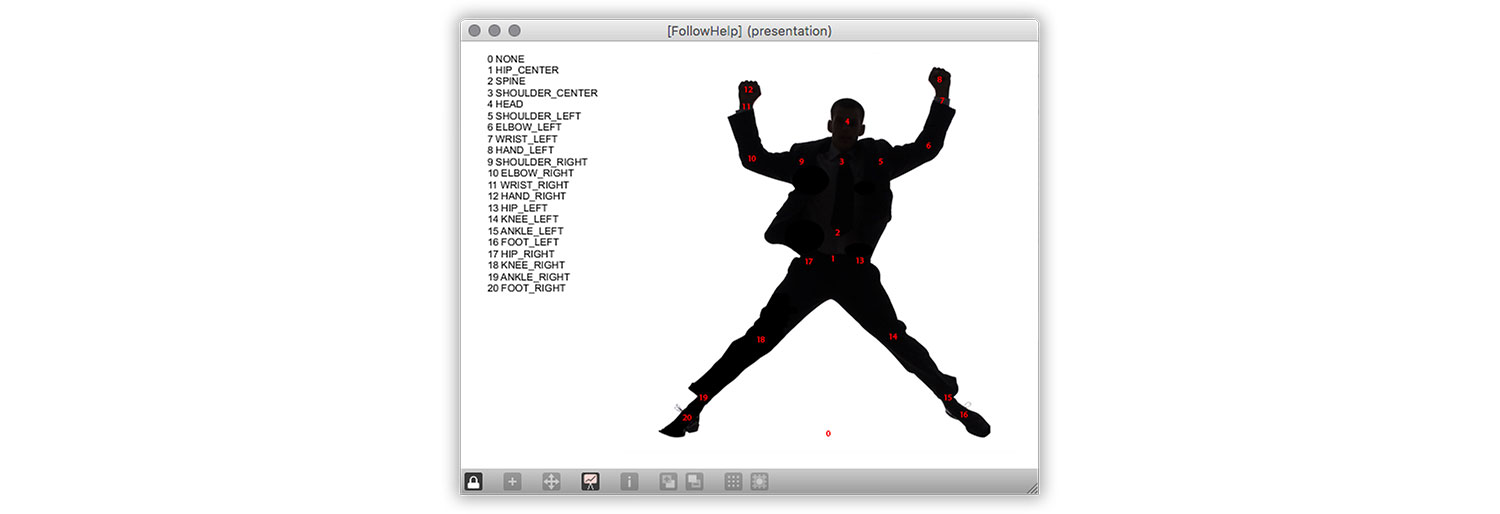

Using a 3D camera, the performer can step into the visual mix and walk around in this artistic 3D space. The performer can touch all graphics and videos to trigger all kinds of actions, like starting/stopping videos, moving graphics, controlling effects, playing with characters, playing a melody, starting a recording, etc. The audio-visual art itself is the interface that controls the show! The performer plays a live part in the compositions and is no longer hidden behind his machines. Read more about this concept on the Augmented Stage project page.

WikiVideo and HyperVideo

For the WikiVideo project we added the ability to load videos directly from a database connected to YouTube. Through the WikiVideo website anybody could add YouTube videos to the database. The WikiVideo application downloaded, analysed and prepared the videos for SenSei automatically. To make it easy to use many videos in a SenSei project, we added the ability to attach meta-data to videos using the Bindings Editor. Videos could be published as HyperVideos. Read more about these concepts on the WikiVideo and HyperVideo project page.

SenSorSuit

I have been using a motion tracking suit (the ‘SenSorSuit’) in my performances since 1999. This suit can also be used to control SenSei. I didn’t improve the SenSorSuit for SenSei, because I was focussed on the development of Augmented Stage.

Interactive Tracks

In 2014 we added the ability to export a SenSei project as a template for the Interactive Tracks mobile app. Read more about this concept on the Interactive Tracks project page.

Projects

SenSei is used for many projects and performances, like LEFestival, Song vh Jaar, Haai Alarm, Steppenwolf, After Movie installation, ASML, Interactive Tracks, Interactive Narrow Casting, Augmented Stage, WikiVideo and HyperVideo.

SenSei application

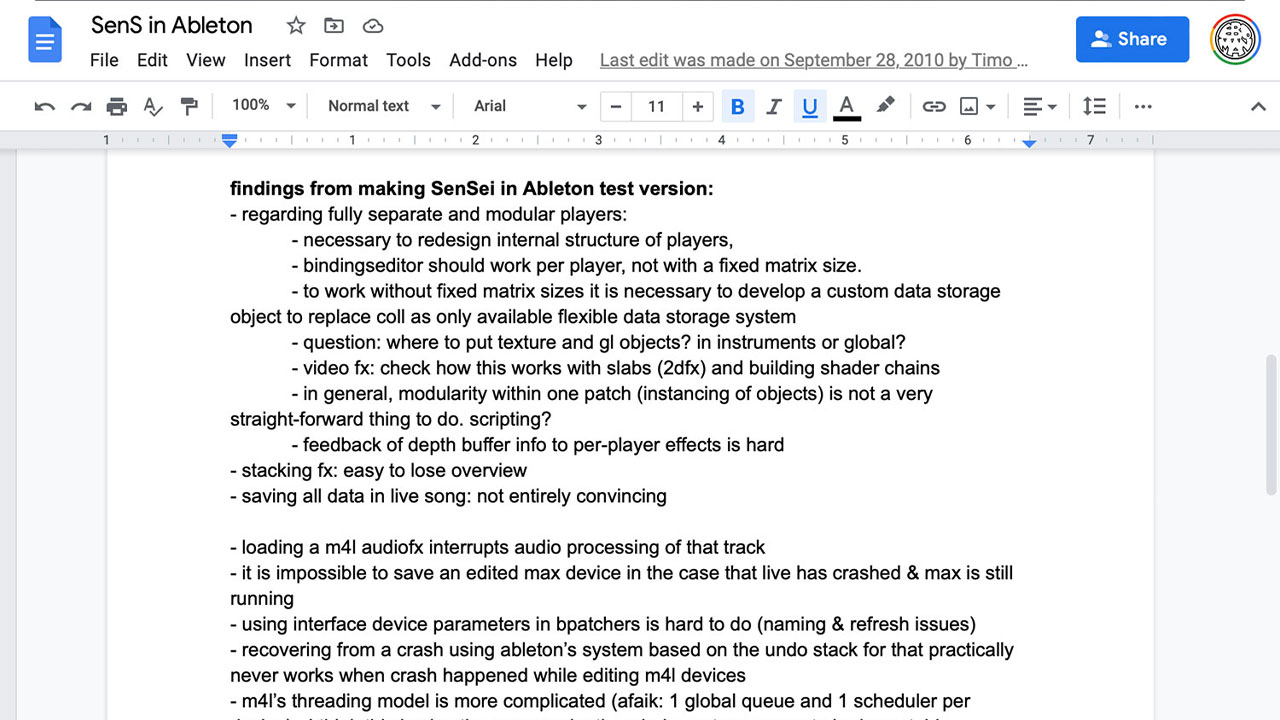

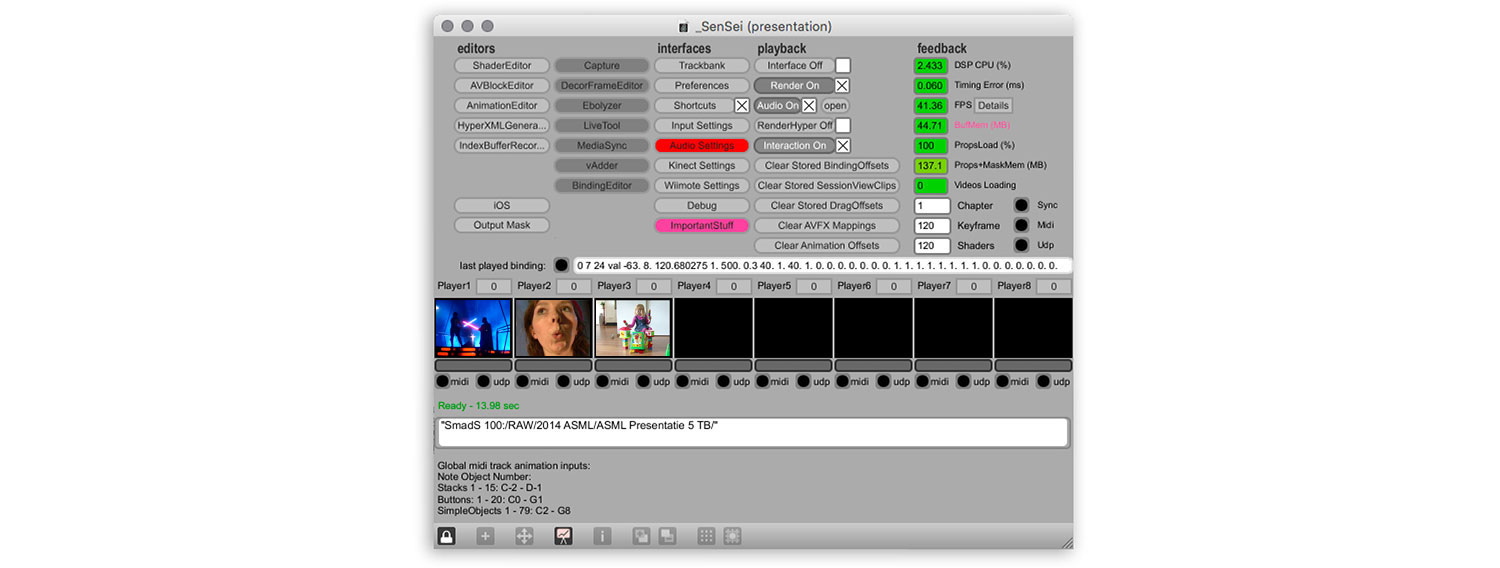

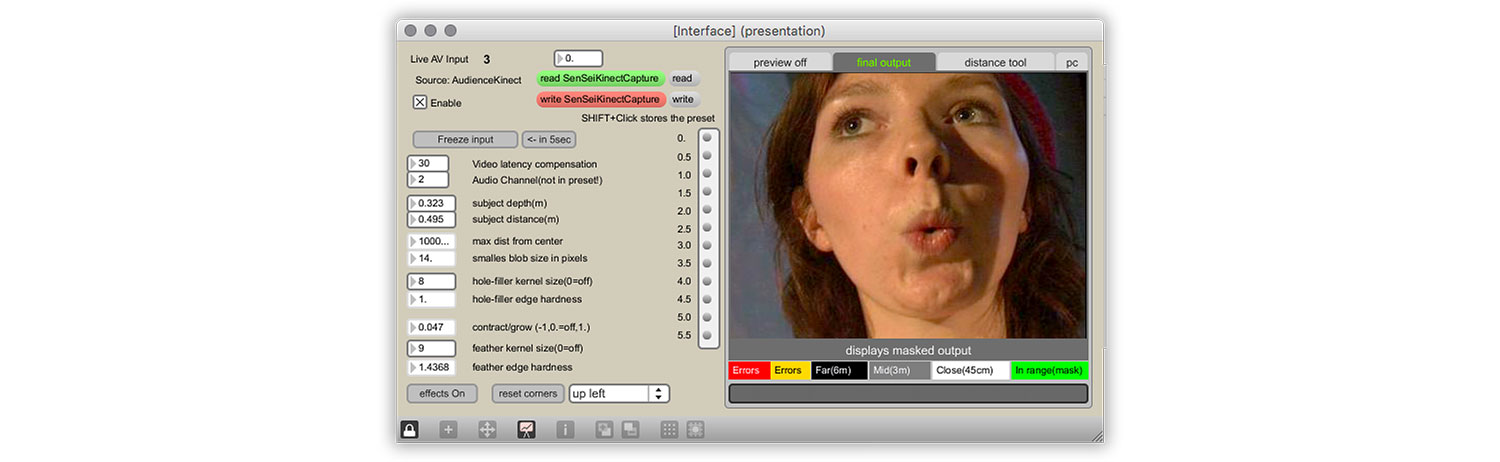

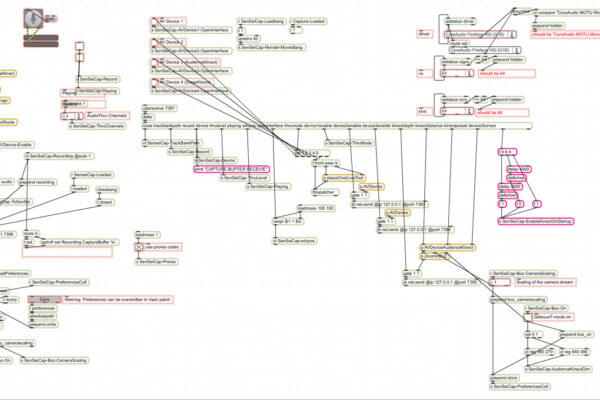

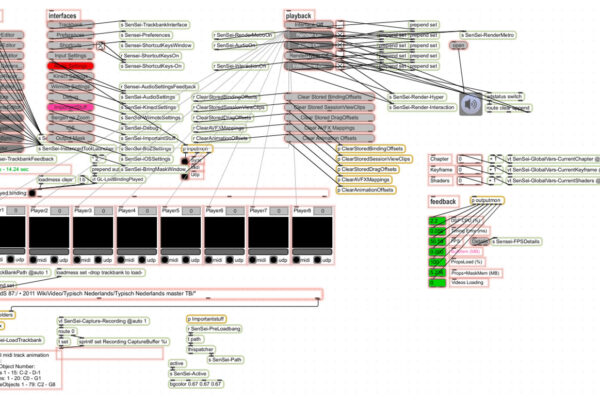

SenSei’s main application is the heart of SenSei: this is where all AV processing happens. The SenSei application runs separately from Ableton Live to optimise performance and reliability, and it is controlled from Ableton Live with the SenSei Max for Live plug-ins. This creates an optimal workflow in which creating music and creating visuals merge into one unified creative process.

File management and editing happens in separate editors. These editors are accessed with buttons in the main application’s interface. All processes can be monitored in the main application’s interface and the Function Monitor.

The Function Monitor is used to monitor the players’ audio-visual playback. It shows all incoming data from Ableton Live and how that data is used. The Function Monitor is not used for editing; all editing happens in Ableton Live and the external editors.

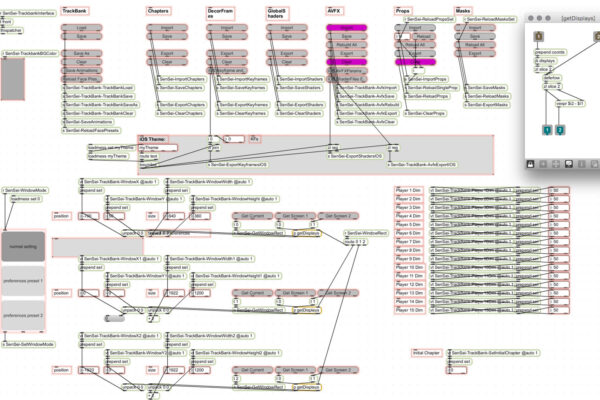

The Trackbank Editor is used to save and open a SenSei project. Different elements of a project can be stored and loaded separately. The Trackbank Editor is also used to export Interactive Tracks.

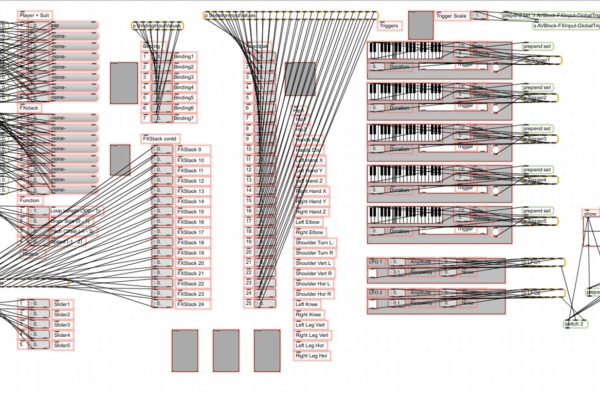

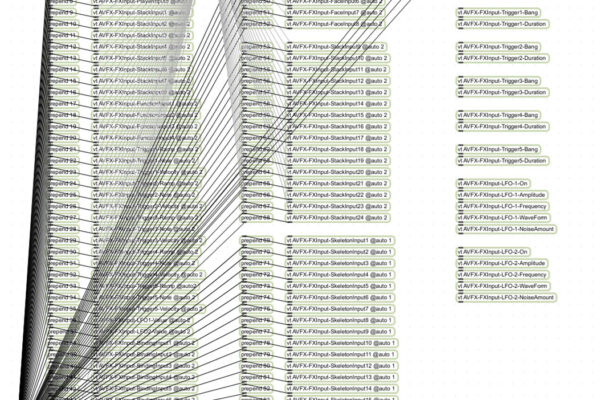

SenSei Ableton Live plug-ins

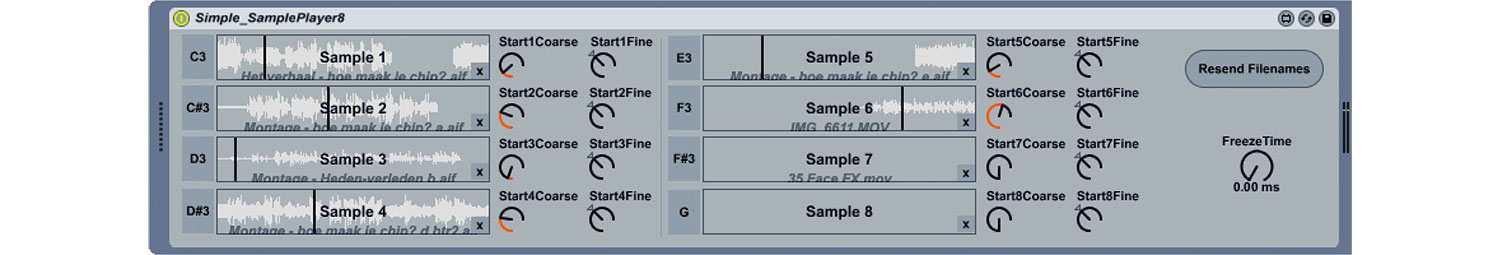

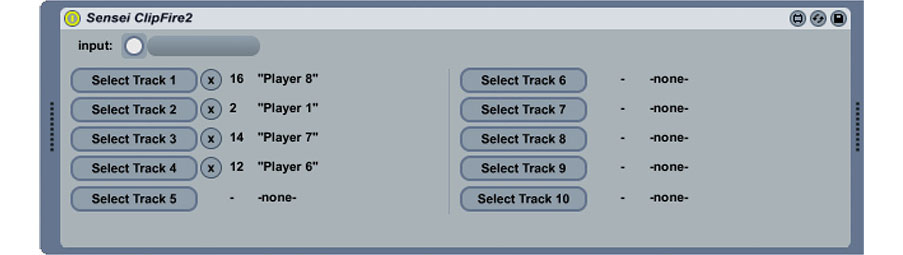

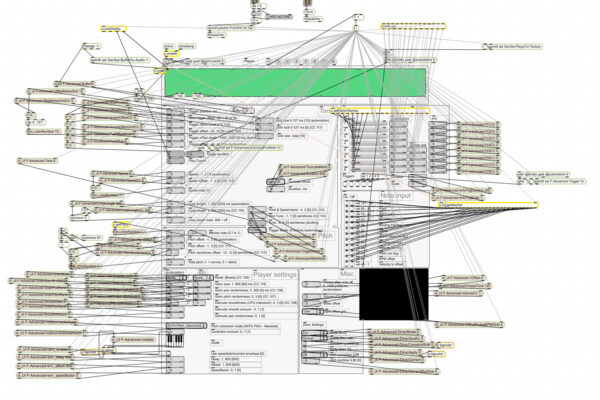

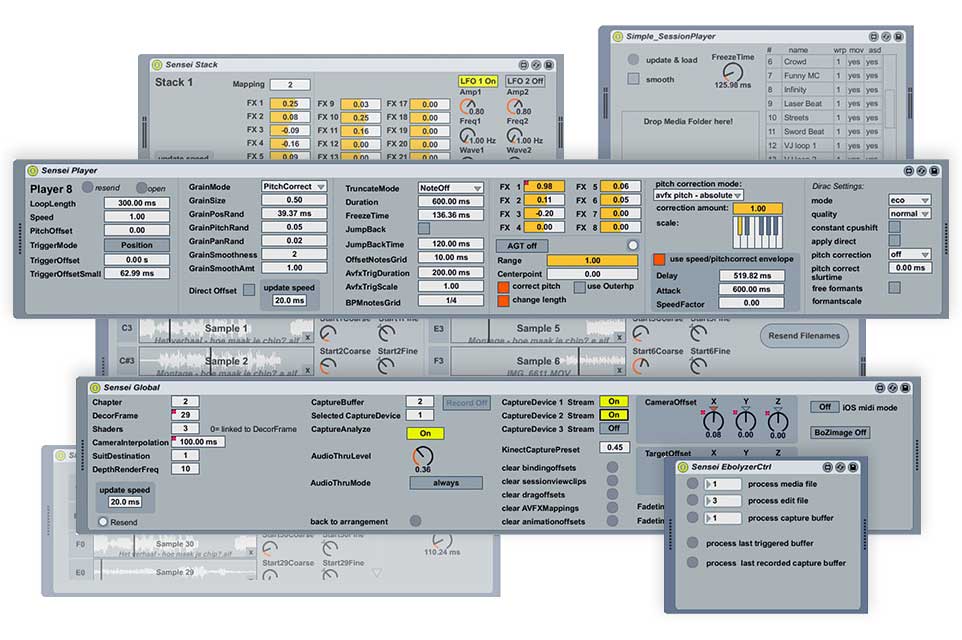

SenSei is equipped with 8 audio-visual players, controlled with the Player plug-in. Each player can hold up to 128 samples. The players offer many creative features to manipulate the timing and character of the AV samples, like flexible loop and sample start control, real-time granular synthesis and FX, pitch correction and much more. Samples are edited with the Bindings Editor.

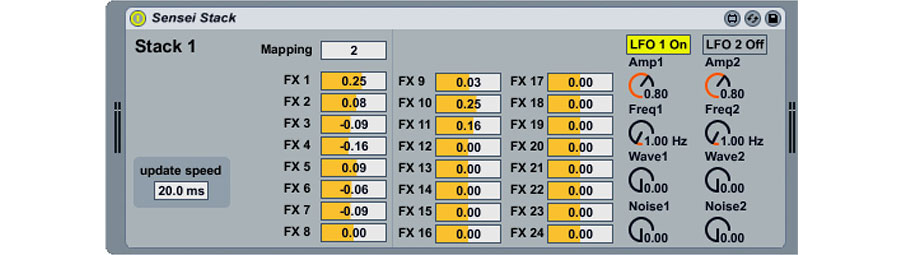

The Stack Plug-in controls one of the 10 available audio-visual effect stacks. AVFX stacks are edited with ‘AVBlock’ (see below). ‘Decor Frames’ are used to route the output of any AV player through one or more AVFX stacks. Decor Frames are edited with the Decor Frame Editor.

All audio and video playback of the SenSei players happens outside Ableton Live, in the SenSei Max application. This caused many issues with latency, file loading and RAM usage. The Simple Sampler Players are AV players that split AV playback: audio is played in Ableton, so it is easier to sync with the rest of Ableton’s audio tracks and it uses Ableton’s RAM, while the video plays back in the SenSei Max application and is synced to the audio by the plug-in. This is similar to EboSuite’s eSimpler plug-in. The Simple Sample Player 8 holds 8 samples.
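
The source does not describe how the plug-in keeps the video in sync with Ableton's audio. One simple approach, sketched in Python under that assumption, is to derive the target video frame from the audio playback position and to seek the video only when it drifts more than a tolerance, so small timing jitter does not cause visible jumps. The sample rate, frame rate, tolerance and function names are hypothetical.

```python
def target_frame(audio_pos_samples: int, sample_rate: int = 44100,
                 fps: float = 25.0, video_offset_frames: int = 0) -> int:
    """Video frame that belongs to the current audio playback position."""
    seconds = audio_pos_samples / sample_rate
    return video_offset_frames + int(seconds * fps)


def resync(current_frame: int, audio_pos_samples: int, sample_rate: int = 44100,
           fps: float = 25.0, tolerance_frames: int = 1) -> int:
    """Keep the video's own frame unless it drifted too far from the audio; then seek."""
    wanted = target_frame(audio_pos_samples, sample_rate, fps)
    if abs(wanted - current_frame) > tolerance_frames:
        return wanted  # hard seek to the audio position
    return current_frame
```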

The Session Player Plug-in adds video playback to Session View, like EboSuite’s eClips plug-in.

SenSei Editors

Sample editing

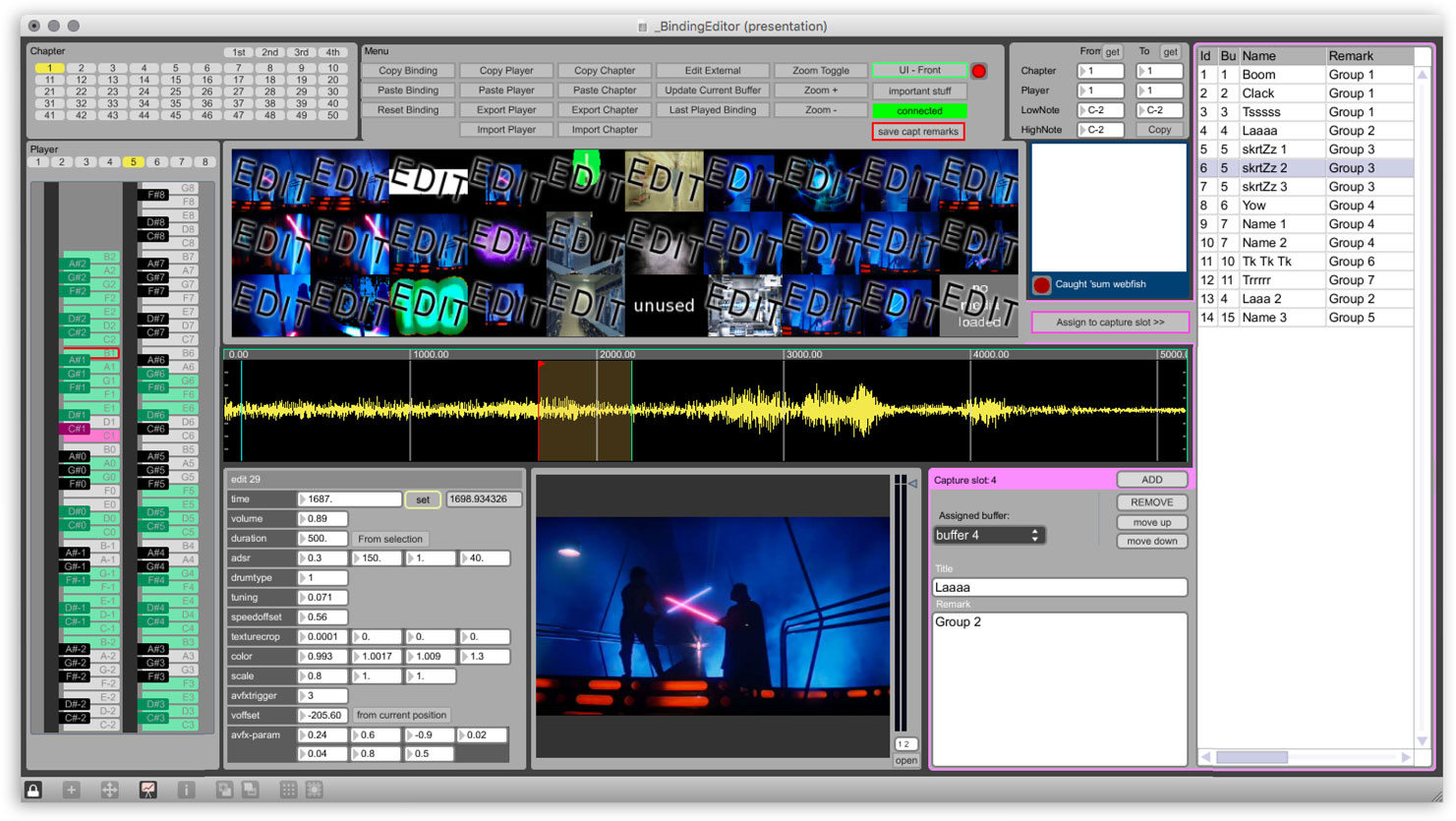

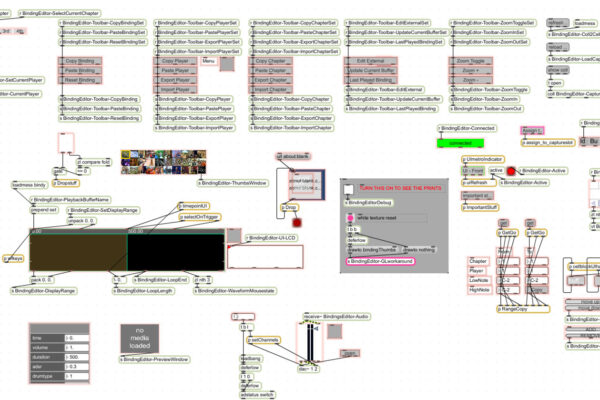

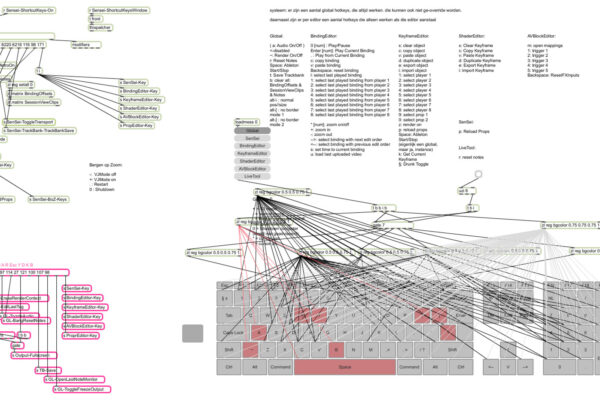

The Bindings Editor is used to create a sample bank (a ‘Chapter’) and assign these samples to the 8 AV players and map them to MIDI notes (a ‘Binding’). A chapter can hold up to 1500 samples. 128 samples can be assigned per player.

Extra sample control data, such as volume, tuning, FX settings, video offset and scaling, can be stored in the sample ‘binding’. These settings are then combined with the control data sent from Ableton Live. The Bindings Editor is also used to create live sample slots and to map these slots to MIDI notes.
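
The data model behind a chapter and its bindings could look roughly like the Python sketch below. The limits (1500 samples per chapter, 8 players, samples mapped to MIDI notes) come from the text. The field names and the rule for combining stored settings with Live's control data (volumes multiplied, tunings added) are assumptions for illustration. Because there are only 128 MIDI notes, keying each player's slots by note also caps this sketch at 128 samples per player.

```python
from dataclasses import dataclass, field
from typing import Dict, List

MAX_CHAPTER_SAMPLES = 1500  # from the text: a chapter holds up to 1500 samples
NUM_PLAYERS = 8             # from the text: 8 AV players


@dataclass
class Binding:
    """Hypothetical per-sample control data stored in a binding."""
    sample: str
    volume: float = 1.0    # multiplied with the volume sent from Live
    tuning: float = 0.0    # semitones, added to the transpose sent from Live
    video_offset: int = 0  # frames to skip at the start of the video


@dataclass
class Chapter:
    samples: List[str] = field(default_factory=list)
    # player index -> {MIDI note -> Binding}; 128 MIDI notes = 128 slots per player
    bindings: Dict[int, Dict[int, Binding]] = field(
        default_factory=lambda: {p: {} for p in range(NUM_PLAYERS)})

    def add_sample(self, path: str) -> None:
        if len(self.samples) >= MAX_CHAPTER_SAMPLES:
            raise ValueError("a chapter holds at most 1500 samples")
        self.samples.append(path)

    def bind(self, player: int, note: int, binding: Binding) -> None:
        if binding.sample not in self.samples:
            raise KeyError(f"{binding.sample} is not in this chapter")
        if not 0 <= note <= 127:
            raise ValueError("MIDI notes run from 0 to 127")
        self.bindings[player][note] = binding

    def trigger(self, player: int, note: int, live_volume: float = 1.0,
                live_transpose: float = 0.0) -> dict:
        """Combine the stored binding data with the control data sent from Live."""
        b = self.bindings[player][note]
        return {
            "sample": b.sample,
            "volume": b.volume * live_volume,
            "tuning": b.tuning + live_transpose,
            "video_offset": b.video_offset,
        }
```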

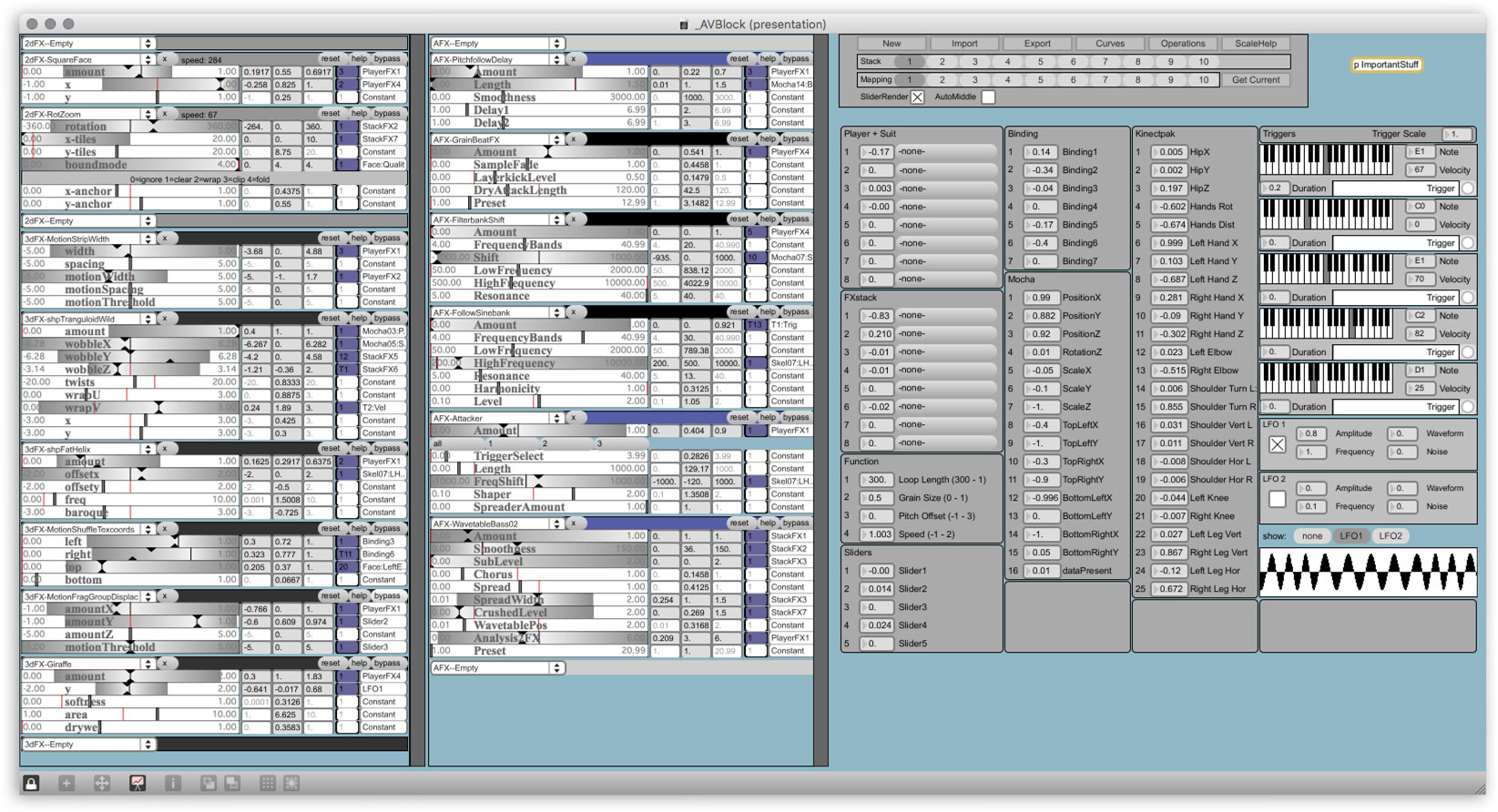

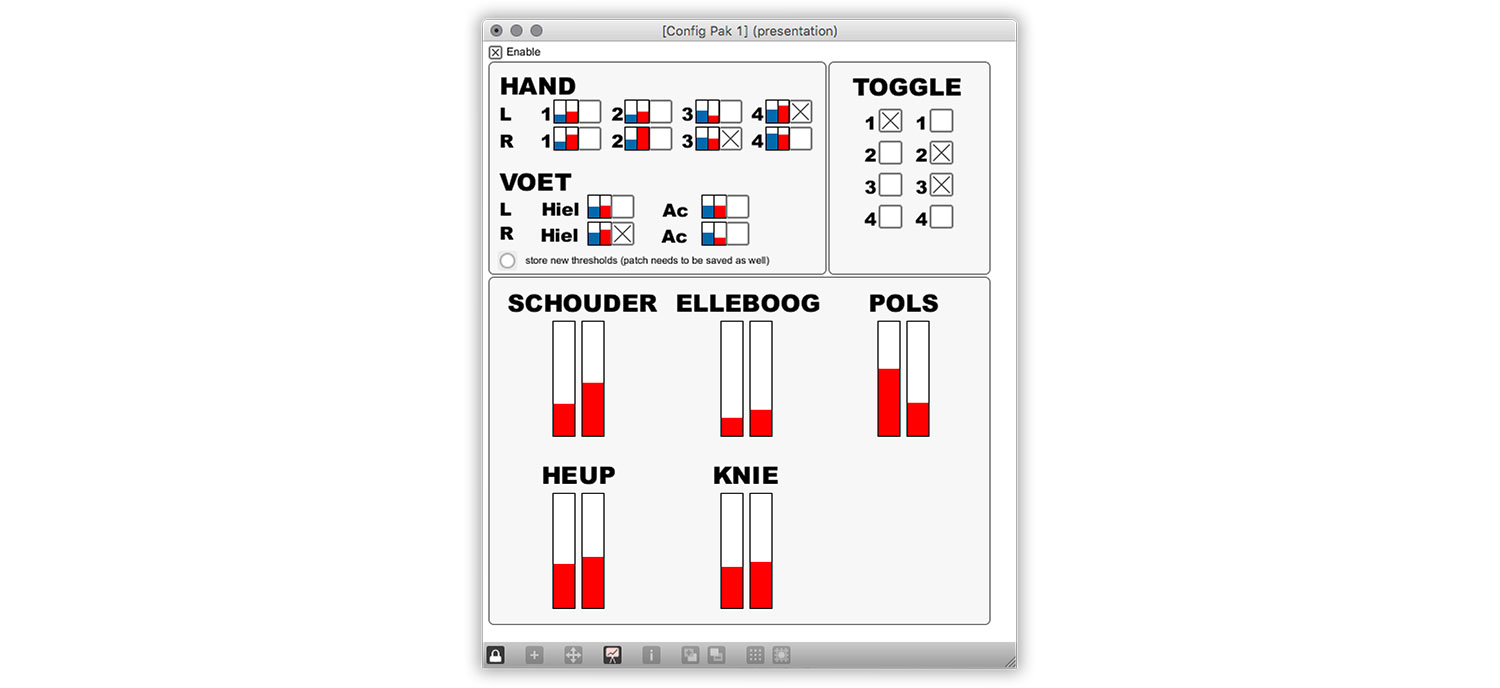

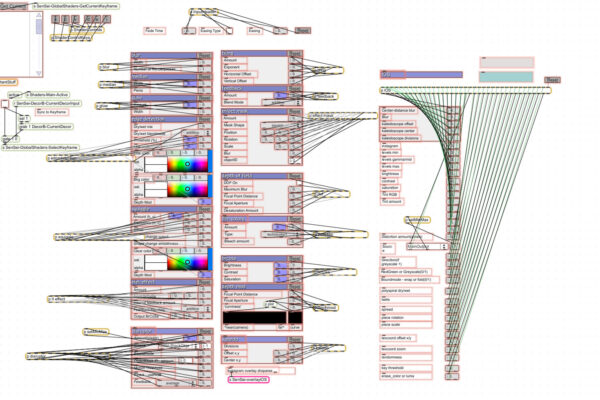

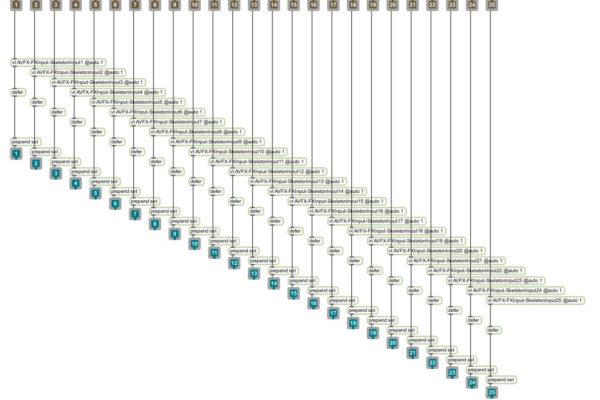

Audio-visual effects editors

AVBlock is used to create stacks of audio-visual effect modules. These modules were custom made for SenS I, II, III, IV and SenSei: 125 3D video effects (vertex shaders), 132 audio effects and 19 2D video effects. They can be freely combined to create original audio-visual 3D effects or to create new visuals and sounds. SenSei supports 10 stacks simultaneously. Many inputs can be used to control 3DAVFX stacks: Ableton Live, the SenSorSuit, Augmented Stage, Mocha motion tracking data, LFOs and bindings. Envelopes (‘Curves’) can be used for more precise parameter mapping. Stacks are mixed with SenSei’s 3D video mixer and real-time motion graphics software.
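
The source does not specify how Curves are defined. A common way to build such an envelope is a list of breakpoints with linear interpolation between them. The Python sketch below assumes that and shows an LFO, one of the inputs named above, driving an effect parameter through a curve. The names and breakpoint values are hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Tuple


def curve(points: List[Tuple[float, float]], x: float) -> float:
    """Piecewise-linear envelope: map x through (x, y) breakpoints sorted by x."""
    xs = [px for px, _ in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # xs[i - 1] <= x < xs[i]
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (x - x0) / (x1 - x0) * (y1 - y0)


def lfo(t: float, rate_hz: float) -> float:
    """Sine LFO scaled to 0..1."""
    return 0.5 + 0.5 * math.sin(2.0 * math.pi * rate_hz * t)


# Hypothetical mapping: an LFO drives an effect amount through a curve that
# stays subtle for most of the range and only opens up near the top.
SUBTLE_THEN_STRONG = [(0.0, 0.0), (0.7, 0.1), (1.0, 1.0)]


def effect_amount(t: float) -> float:
    return curve(SUBTLE_THEN_STRONG, lfo(t, rate_hz=0.25))
```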

With Nesa I created many 3D visual effects for AVBlock, so-called ‘vertex shaders’. In 2007 computer hardware was not yet capable of processing complex effects while playing and mixing videos and running music software at the same time. A great aspect of vertex shaders is that they are not very demanding on the computer’s processor, so it was possible to use many effects without slowing the computer down too much. An important downside, though, was that these effects tend to look unpolished and raw. I like that aesthetic, but for commercial projects it was a problem. That is one of the reasons I invested a lot in the real-time motion graphics software of SenS/SenSei in 2007-2011.

With Timo Rozendal I created many audio effects for AVBlock, because audio effects that morph sounds into completely new sounds are rare. We made effects to generate bass sounds from voice samples, drum sounds from random sounds, and many other creative effects.

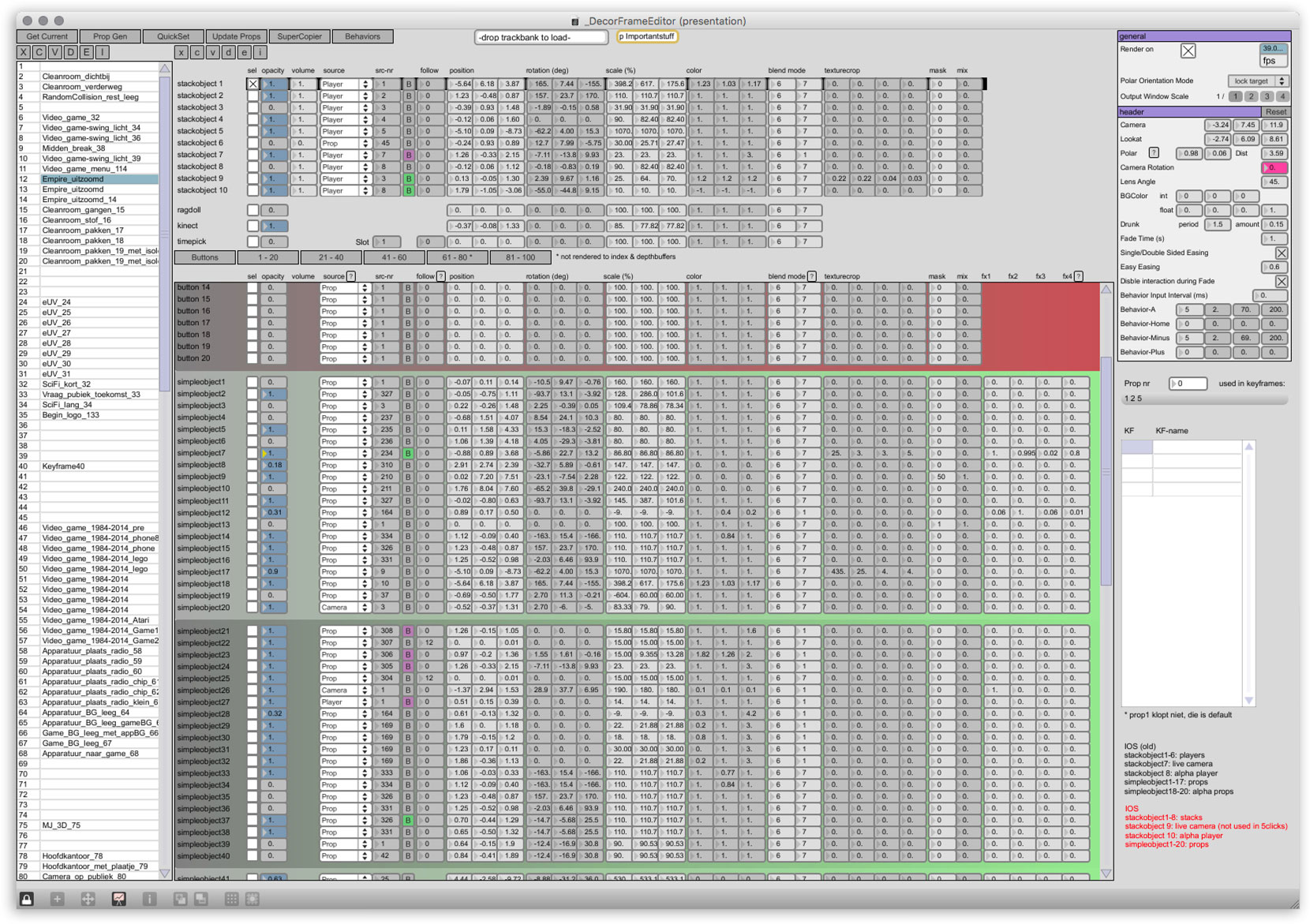

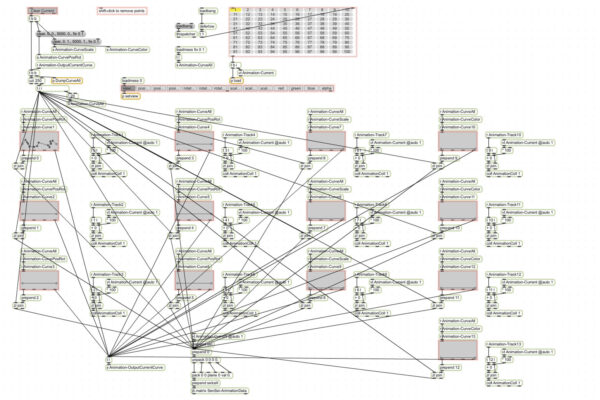

3D video mixing & interactive real-time motion graphics

The Decor Frame Editor is used to create and edit ‘Decor Frames’: presets for the 3D video mixer and real-time motion graphics software. A Decor Frame stores the source, position, scale, rotation, color, blend mode, mask, texture crop and additional effects of the 10 stack layers (‘stack objects’) and 255 additional layers (‘simple objects’) that are mixed together in a virtual 3D space. The settings for the virtual 3D camera (including depth of field) are also stored in the Decor Frame. Besides these layers there are 20 button objects (for Augmented Stage and the Senna interface), a layer for the Kinect 3D camera input, a layer for a SenSorSuit visualisation and a layer for an experimental sample selector. The Decor Frame Editor has many sub-editors, like an Animation Editor to add extra motion to a layer, and other sub-editors that make editing a complex Decor Frame easier.
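
As a rough illustration of how much state a Decor Frame holds, the Python sketch below models it as plain data classes. The object counts (10 stack objects, up to 255 simple objects, 20 button objects) and the stored properties come from the text. The field names, default values and the `add_simple_object` method are hypothetical, and the Kinect, SenSorSuit and sample selector layers are left out.

```python
from dataclasses import dataclass, field
from typing import List, Optional

STACK_OBJECTS = 10        # from the text
MAX_SIMPLE_OBJECTS = 255  # from the text
BUTTON_OBJECTS = 20       # from the text


@dataclass
class Layer:
    source: Optional[str] = None           # video, graphic or camera stream
    position: tuple = (0.0, 0.0, 0.0)
    rotation: tuple = (0.0, 0.0, 0.0)      # degrees around x, y, z
    scale: tuple = (1.0, 1.0, 1.0)
    color: tuple = (1.0, 1.0, 1.0, 1.0)    # RGBA
    blend_mode: str = "normal"
    mask: Optional[str] = None
    crop: tuple = (0.0, 0.0, 1.0, 1.0)     # normalised x, y, w, h of the texture
    effects: list = field(default_factory=list)


@dataclass
class Camera:
    position: tuple = (0.0, 0.0, 5.0)
    target: tuple = (0.0, 0.0, 0.0)
    focus_distance: float = 5.0  # depth of field: distance that stays sharp
    aperture: float = 0.0        # 0 means everything is in focus


@dataclass
class DecorFrame:
    name: str
    stack_objects: List[Layer] = field(
        default_factory=lambda: [Layer() for _ in range(STACK_OBJECTS)])
    simple_objects: List[Layer] = field(default_factory=list)
    buttons: List[Layer] = field(
        default_factory=lambda: [Layer() for _ in range(BUTTON_OBJECTS)])
    camera: Camera = field(default_factory=Camera)

    def add_simple_object(self, layer: Layer) -> int:
        """Append a simple object and return its index."""
        if len(self.simple_objects) >= MAX_SIMPLE_OBJECTS:
            raise ValueError("a Decor Frame holds at most 255 simple objects")
        self.simple_objects.append(layer)
        return len(self.simple_objects) - 1
```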

The Decor Frame Behaviors Editor is a sub-editor for adding interaction behaviors to Decor Frames. Augmented Stage and Senna use behaviors to determine what happens when the user touches a layer (‘object’). Behaviors can turn SenSei’s visual output into an interface for Ableton Live: touching graphics or videos can tell Ableton to jump to another locator, start/stop clips, trigger samples, change mixer settings, etc. Behaviors can also trigger animations on a layer (making it fade out, bounce around, fall down like a leaf, etc.) or jump to another Decor Frame, which is great for making an interactive experience.
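
The idea of behaviors, where touching an object fires one or more actions, can be sketched as a lookup table from object names to callbacks. In the Python sketch below the actions only return descriptive strings; in SenSei they would instead send messages to Ableton Live or to the renderer. All class and function names are hypothetical.

```python
from typing import Callable, Dict, List

Action = Callable[[str], str]  # receives the touched object's name


class BehaviorTable:
    """Maps each object in a Decor Frame to the actions fired when it is touched."""

    def __init__(self) -> None:
        self._behaviors: Dict[str, List[Action]] = {}

    def on_touch(self, object_name: str, action: Action) -> None:
        self._behaviors.setdefault(object_name, []).append(action)

    def touch(self, object_name: str) -> List[str]:
        """Fire every action bound to the object, in the order they were added."""
        return [action(object_name) for action in self._behaviors.get(object_name, [])]


def fire_clip(track: int, scene: int) -> Action:
    return lambda obj: f"live: fire clip track {track} scene {scene}"


def jump_to_locator(name: str) -> Action:
    return lambda obj: f"live: jump to locator {name}"


def animate(kind: str) -> Action:
    return lambda obj: f"animate {obj}: {kind}"


def go_to_decor_frame(name: str) -> Action:
    return lambda obj: f"switch to Decor Frame {name}"
```

Binding `fire_clip(2, 0)` and `animate("bounce")` to the same object lets one touch both start a clip in Live and make the graphic react visually.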

The Animation Editor is a sub-editor of the Decor Frame Editor. Animations add motion to a layer (like jumping up and down, wiggling or floating in the wind) when the user touches it (using Augmented Stage or Senna). MIDI notes on a MIDI track with a Global plug-in in Ableton Live can also trigger animations on a layer. The ASML performance is an example of this (see below): the moving loudspeakers and flying bullets are graphics with an animation that is triggered by a MIDI note. Many light effects in the performance are also triggered this way.
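
The text does not describe how animations are computed. The Python sketch below assumes one simple form: a "jump up and down" animation modeled as a damped bounce, started by a MIDI note-on message as it would be from a Global plug-in track. The class, its parameters and the handler function are hypothetical; only the MIDI status-byte convention (0x90 to 0x9F is note-on, and a note-on with velocity 0 counts as note-off) is standard.

```python
import math
from typing import Dict, Optional


class BounceAnimation:
    """Hypothetical 'jump up and down' animation: a damped bounce on a layer's y position."""

    def __init__(self, height: float = 1.0, bounces_per_s: float = 2.0,
                 decay_s: float = 0.5) -> None:
        self.height = height
        self.bounces_per_s = bounces_per_s
        self.decay_s = decay_s
        self.started_at: Optional[float] = None

    def trigger(self, now: float) -> None:
        self.started_at = now

    def offset(self, now: float) -> float:
        """Vertical offset to add to the layer at time `now` (seconds)."""
        if self.started_at is None or now < self.started_at:
            return 0.0
        t = now - self.started_at
        # |sin(pi * f * t)| has period 1/f, i.e. f bounces per second.
        return (self.height * abs(math.sin(math.pi * self.bounces_per_s * t))
                * math.exp(-t / self.decay_s))


def handle_midi(status: int, note: int, velocity: int,
                mapping: Dict[int, BounceAnimation], now: float) -> None:
    """Trigger the animation mapped to a note-on message (any MIDI channel)."""
    is_note_on = (status & 0xF0) == 0x90 and velocity > 0  # velocity 0 means note-off
    if is_note_on and note in mapping:
        mapping[note].trigger(now)
```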

3D camera and SenSorSuit editors

The SenSorSuit can also be used to control SenSei. The SenSorSuit Monitor is used when it needs to be calibrated.

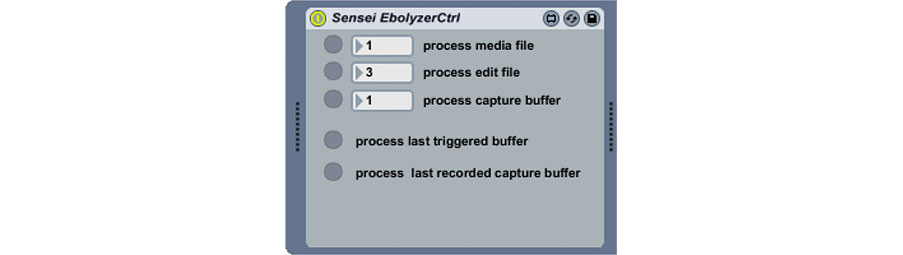

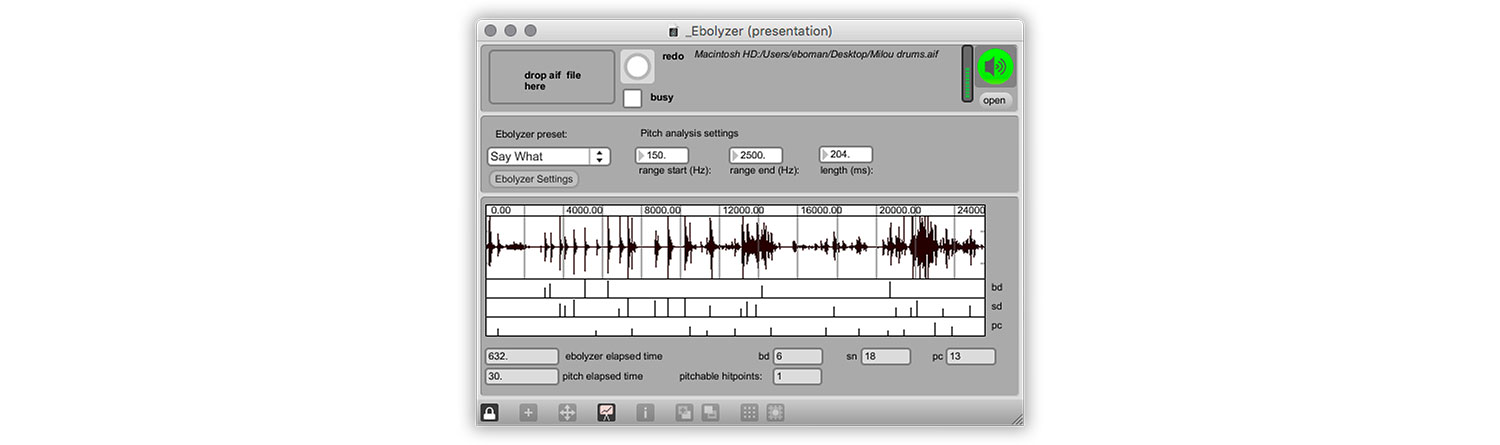

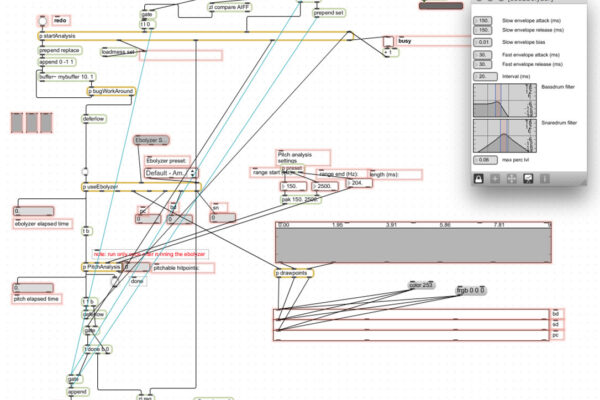

Live sampling editors

The EboLyzer analyzes audio to detect loud sounds (usable for rhythms) and harmonic sounds (usable for melodies). The frequency spectrum of each rhythm sound is analyzed to determine its type: a bass-drum type (low frequencies), a snare-drum type (mid frequencies) or a hi-hat type (high frequencies). These results are used by AGT (Accent Grijp Technologie™) to sort the detected points and map them to MIDI notes automatically, so they can be used in a live performance.
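
The EboLyzer and AGT algorithms are not published here. The Python sketch below shows one straightforward way to do what the paragraph describes, under assumptions stated up front. Onsets are frames whose RMS level rises above a threshold. Each hit is classified by comparing spectral energy below 200 Hz, between 200 Hz and 4 kHz, and above 4 kHz, cut-offs chosen only for illustration. The three types are then mapped to the General MIDI drum notes 36, 38 and 42. A naive DFT keeps the sketch dependency-free; a real analyser would use an FFT.

```python
import math
from typing import List, Sequence, Tuple

# Assumption: General MIDI drum notes as targets (bass drum, snare, closed hi-hat).
GM_NOTES = {"bassdrum": 36, "snaredrum": 38, "hihat": 42}


def band_energies(frame: Sequence[float], sample_rate: float,
                  low_cut: float = 200.0,
                  high_cut: float = 4000.0) -> Tuple[float, float, float]:
    """Spectral power below low_cut, between the cuts and above high_cut (naive DFT)."""
    n = len(frame)
    low = mid = high = 0.0
    for k in range(1, n // 2):  # skip DC, stop below Nyquist
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        power = re * re + im * im
        freq = k * sample_rate / n
        if freq < low_cut:
            low += power
        elif freq < high_cut:
            mid += power
        else:
            high += power
    return low, mid, high


def classify_hit(frame: Sequence[float], sample_rate: float) -> str:
    low, mid, high = band_energies(frame, sample_rate)
    return max((low, "bassdrum"), (mid, "snaredrum"), (high, "hihat"))[1]


def detect_onsets(samples: Sequence[float], frame_size: int,
                  threshold: float) -> List[int]:
    """Start indices of frames whose RMS level rises above the threshold."""
    onsets, was_loud = [], False
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(x * x for x in frame) / frame_size)
        loud = rms >= threshold
        if loud and not was_loud:
            onsets.append(start)
        was_loud = loud
    return onsets


def map_hits(samples: Sequence[float], sample_rate: float, frame_size: int = 256,
             threshold: float = 0.1) -> List[Tuple[int, int]]:
    """(sample position, MIDI note) for every detected hit."""
    return [(start, GM_NOTES[classify_hit(samples[start:start + frame_size], sample_rate)])
            for start in detect_onsets(samples, frame_size, threshold)]
```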

WikiVideo and HyperVideo editors

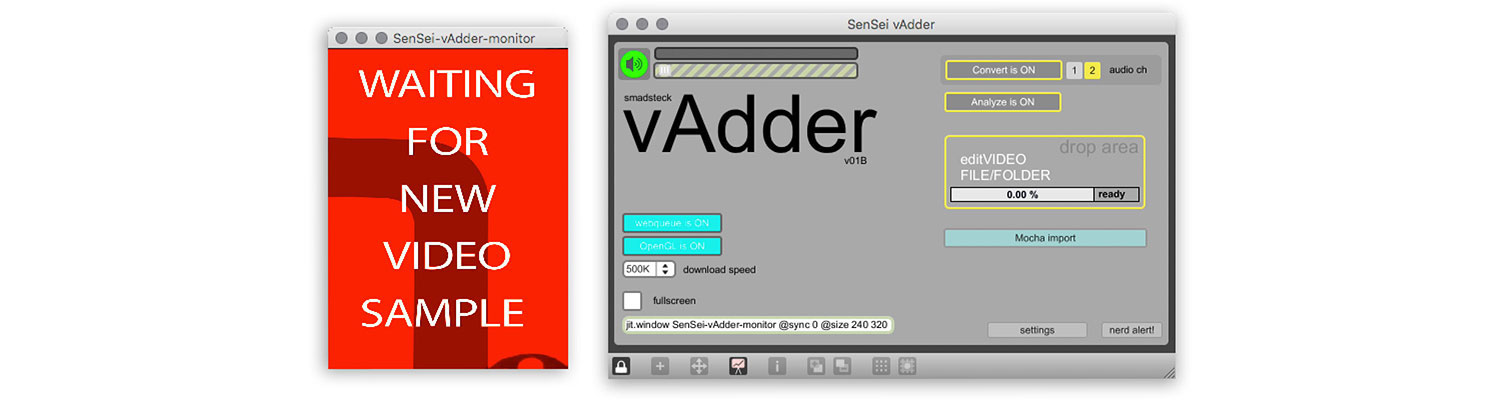

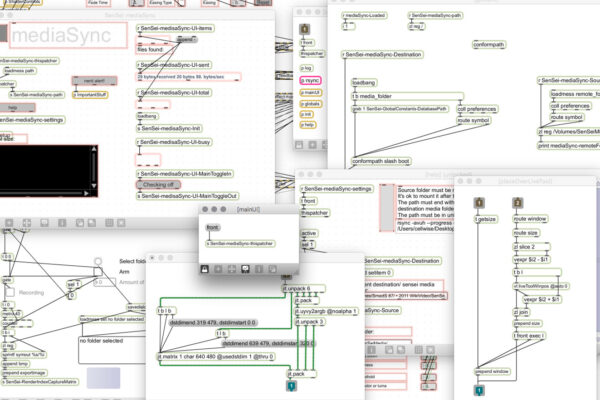

When working with video samples (‘found footage’), finding the right samples can be very time-consuming, and this search often disrupts the creative process of making an audio-visual composition. For SenSei I tried to make loading, editing and browsing video files so easy that it could become part of the creative sound and visual design process.

The Bindings Editor (see above) was therefore directly connected to a media folder that was constantly filled with new audio-visual samples by visitors of the WikiVideo website. The Media Sync application synced the media folder to the Bindings Editor, so newly added video samples could be used immediately.
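
How the Media Sync application detected new files is not documented. A minimal polling approach, assumed here, compares the folder's current listing with the files already known and reports only new media files. The extension list and function names are hypothetical, and a production version might use the operating system's file-change notifications instead of polling.

```python
import os
import time
from typing import Callable, Set

MEDIA_EXTENSIONS = {".mov", ".mp4", ".wav", ".aif"}  # assumption


def scan(folder: str) -> Set[str]:
    """Names of media files currently in the folder."""
    return {name for name in os.listdir(folder)
            if os.path.splitext(name)[1].lower() in MEDIA_EXTENSIONS}


def sync_once(folder: str, known: Set[str], on_new: Callable[[str], None]) -> Set[str]:
    """Report files that appeared since the last scan; return the new listing."""
    current = scan(folder)
    for name in sorted(current - known):
        on_new(os.path.join(folder, name))
    return current


def watch(folder: str, on_new: Callable[[str], None], interval_s: float = 2.0) -> None:
    """Poll forever, e.g. to add each new sample to the Bindings Editor's list."""
    known = scan(folder)  # files present at start-up count as already synced
    while True:
        time.sleep(interval_s)
        known = sync_once(folder, known, on_new)
```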

The vAddr was also part of the WikiVideo project. The vAddr application monitored the WikiVideo website; whenever it received a request through the website, it automatically downloaded the video and added it to the media folder. This process was visible on a monitor in my studio and streamed live 24/7.

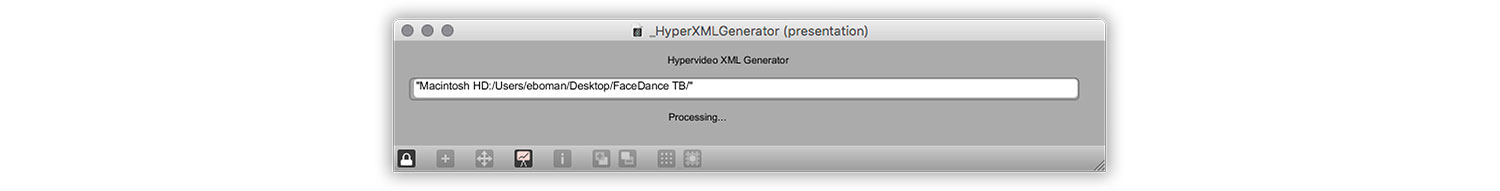

AV compositions produced with SenSei can be published as ‘Hyper Videos’. A Hyper Video is a video in which you can click on all the different elements of the video collage (video samples and graphics). Clicking on an element takes the user to another place on the internet (similar to hypertext links). The Hyper XML Generator was used to create the hyper layer for the Hyper Video player.
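
The format of the hyper layer is not documented here. The Python sketch below assumes a simple one: each clickable element is a rectangle in normalised screen coordinates with a URL and the time span in which it is visible, written to XML with the standard library's `xml.etree.ElementTree`. A click is resolved to the topmost visible element. The element and attribute names are hypothetical and do not describe the real Hyper XML Generator schema.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HyperRegion:
    x: float      # left edge, 0..1 of the video width
    y: float      # top edge, 0..1 of the video height
    w: float
    h: float
    url: str
    start: float  # time span (seconds) in which the element is visible
    end: float


def hit_test(regions: List[HyperRegion], x: float, y: float, t: float) -> Optional[str]:
    """URL of the topmost region under a click; later regions are drawn on top."""
    for r in reversed(regions):
        if r.start <= t < r.end and r.x <= x < r.x + r.w and r.y <= y < r.y + r.h:
            return r.url
    return None


def to_xml(regions: List[HyperRegion]) -> str:
    """Serialise the regions as a (hypothetical) hyper layer document."""
    root = ET.Element("hyperlayer")
    for r in regions:
        ET.SubElement(root, "region", {
            "x": str(r.x), "y": str(r.y), "w": str(r.w), "h": str(r.h),
            "start": str(r.start), "end": str(r.end), "href": r.url,
        })
    return ET.tostring(root, encoding="unicode")
```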

To preview the hyper layer for the Hyper Video player, SenSei can run in ‘RenderHyper’ mode. When this mode is enabled SenSei’s output window will show all composition objects and their role in the hyper layer. The hyper layer uses a low resolution, so the RenderHyper output window is much smaller than the regular output window.

Software development

History of SenSei

SenSei has a long history, starting in the 1980s, and is the result of many experiments and prototypes, like the 1995 video sampler, EboNaTor, SenSorSuit, Frame Drummer Pro, skrtZz pen, DVJ mixer, SenS I, II, III, IV and Senna.

During the development of SenSei it became clear that the time was ripe to turn this prototype into a release-worthy product. The main purpose of starting EboStudio was to create the ideal audio-visual instrument, and to make that instrument available for everybody. This led to the release of EboSuite in 2017.

Team

Produced and developed by Eboman and EboStudio

- Bas van der Graaff

- Jeroen Hofs

- Mattijs Kneppers

- Timo Rozendal

- Nenad Popov