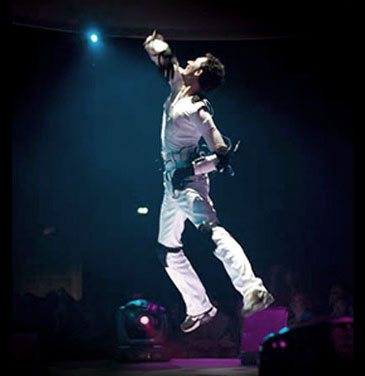

In 2011, I developed Augmented Stage for my performances. With Augmented Stage I can literally step into the audio-visual composition on the screen, walk around in this artistic, virtual world and interact with all the videos and graphics live. I can walk around Michael Jackson on stage, interrupt a television show, be the drummer in a music video, jump between explosions, move objects, throw away a logo and much more. The possibilities are limitless.

Art is an interface

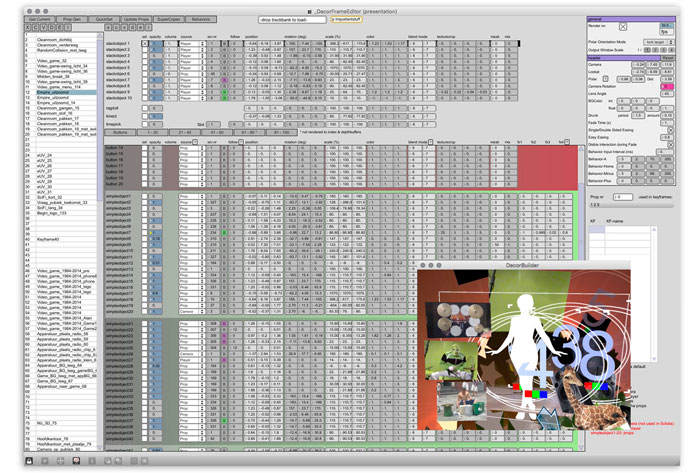

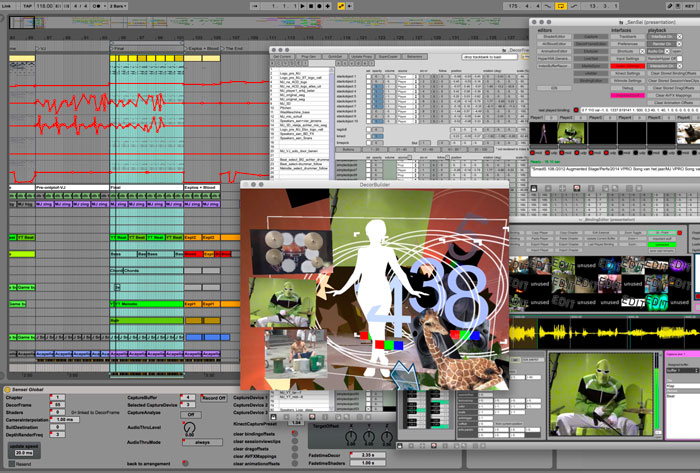

Augmented Stage also controls the software I use to run the show (SenSei and Ableton Live). By touching the videos and graphics I can start, stop or jump through the sequence, control audio-visual effects, trigger videos, record video, and so on.

The audio-visual composition is not only my art, but also the interface to control the show!

Meanwhile, the audience can easily follow all the action on the video screens. I am no longer just an audio-visual performer hidden behind my computer, but also an actor playing a part in my compositions live.

An interactive artistic world

We updated SenSei’s 3D video mix and real-time motion graphics software to make videos and graphics interactive. Interactive ‘behaviours’ are attached to the videos and graphics. When a graphic or video is touched by the performer, this behaviour is triggered and executed.
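As a rough illustration of the idea, here is a minimal Python sketch of behaviours attached to graphics and fired when a tracked body point touches them. The `Graphic` class, the bounding-sphere touch test and the `update_touches` function are hypothetical and not SenSei's actual code; the sketch assumes the tracker delivers named 3D joint positions (in metres) every frame.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Graphic:
    name: str
    position: Vec3
    radius: float  # hypothetical bounding sphere used for the touch test
    behaviours: List[Callable[["Graphic"], None]] = field(default_factory=list)
    touched: bool = False  # touch state from the previous frame


def update_touches(graphics: List[Graphic], joints: Dict[str, Vec3]) -> List[str]:
    """Run each graphic's behaviours once, on the frame a joint first enters it."""
    fired = []
    for g in graphics:
        inside = any(math.dist(p, g.position) <= g.radius for p in joints.values())
        if inside and not g.touched:  # rising edge: a new touch
            for behaviour in g.behaviours:
                behaviour(g)
            fired.append(g.name)
        g.touched = inside
    return fired


# Usage: a logo that "falls down" when a hand touches it.
log: List[str] = []
logo = Graphic("logo", (0.0, 1.5, 2.0), 0.3, [lambda g: log.append(f"{g.name} falls down")])
joints = {"right_hand": (0.1, 1.4, 2.0), "head": (0.0, 1.7, 2.5)}
print(update_touches([logo], joints))  # ['logo']
print(update_touches([logo], joints))  # [] because the hand is still inside
```

Checking for the rising edge means a behaviour fires once per touch, instead of on every frame the hand stays inside the graphic.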

Touching a graphic might make it fall down, play a video, distort a video, jump to another part of the performance, change a rhythm or melody, start a video recording, change the video mix or an effect, and so on. I can also grab videos and graphics and move them around.

3D camera

For these performances I use the Kinect 3D camera. This camera was developed by Microsoft for the Xbox game console, but its data can be ‘hacked’ and used for other purposes. A computer analyses the Kinect data, removes the background from the Kinect video stream, tracks 24 points on the body and sends all of this to the Augmented Stage computer. There, the Kinect video stream is mixed with other graphics and visuals into a virtual 3D space. Thanks to the 24 tracking points, the software knows where the performer's body, hands, legs, arms, head, etc. are in this 3D space.
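A simple way to remove the background, shown here as an illustrative stand-in and not necessarily what the tracking software actually does, is a depth key: keep only the pixels whose depth falls within the zone where the performer stands. The `remove_background` function, the `near`/`far` values, the millimetre units and the convention that 0 means "no depth reading" are assumptions of this sketch.

```python
from typing import List, Optional, Sequence


def remove_background(
    rgb: Sequence[str],
    depth_mm: Sequence[int],
    near: int = 500,   # assumed closest usable distance, in millimetres
    far: int = 3000,   # assumed back edge of the performance zone
) -> List[Optional[str]]:
    """Depth key: keep pixels inside [near, far]; None marks removed background.

    In this sketch a depth of 0 means the sensor had no reading for that pixel,
    so such pixels are treated as background.
    """
    return [
        px if d != 0 and near <= d <= far else None
        for px, d in zip(rgb, depth_mm)
    ]


# Usage on a flattened four-pixel frame.
frame = ["performer", "back wall", "unknown", "performer"]
depth = [2000, 4500, 0, 800]
print(remove_background(frame, depth))  # ['performer', None, None, 'performer']
```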

Presentation

Augmented Stage is great for performances and shows, but it is also very useful for presentations and talks. It is a bit like Microsoft’s PowerPoint or Apple’s Keynote, but on steroids. Instead of just showing slides with information, you can walk into the slide and interact with the subject you are talking about! This video shows a brief talk I gave in 2014 about my work and Augmented Stage.

Showcase

The videos below show a short performance I did with Augmented Stage in 2014. The videos on the right show the performance, the videos on the left show how SenSei and Ableton Live were used during this performance. The videos further down this page show different uses and concepts of Augmented Stage.

Walking around in audio-visuals

Because 24 points on the body are tracked constantly in 3D space, SenSei knows exactly where the performer is. This makes it possible to mix the performer with the videos and graphics in the 3D composition. The performer can walk between the videos and graphics and become part of this artistic, virtual world. In this example I walk around Michael Jackson and even crawl between his legs!
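One way to let a performer walk between videos and graphics is a per-pixel depth test: for every pixel, show whichever surface is closer to the virtual camera. The Python sketch below works on flattened pixel lists and uses strings in place of colours; the `composite` function and its data layout are illustrative assumptions, not how SenSei is implemented.

```python
import math
from typing import List, Optional, Sequence


def composite(
    performer_rgb: Sequence[str],
    performer_depth: Sequence[Optional[float]],
    scene_rgb: Sequence[str],
    scene_depth: Sequence[float],
) -> List[str]:
    """Per-pixel depth test: the nearer surface wins.

    Performer pixels whose background was removed have depth None and
    therefore never cover the scene.
    """
    out = []
    for pr, pd, sr, sd in zip(performer_rgb, performer_depth, scene_rgb, scene_depth):
        d = math.inf if pd is None else pd
        out.append(pr if d < sd else sr)
    return out


# Usage: crawling between two legs. The legs are 3.0 m away, the gap between
# them shows empty scene (infinitely far), and the performer is 3.5 m away.
scene = ["leg", "gap", "leg"]
scene_depth = [3.0, math.inf, 3.0]
me = ["me", "me", "me"]
me_depth = [3.5, 3.5, 3.5]
print(composite(me, me_depth, scene, scene_depth))  # ['leg', 'me', 'leg']
```

Sorting whole layers by depth (the painter's algorithm) would be simpler, but a per-pixel test is what lets parts of a video, such as legs, appear in front of the performer while other parts of it appear behind.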

Audio-visual effects

In the example below I control audio-visual effects live with Augmented Stage. I simply walk up to Michael Jackson, grab him by his hair with my left hand (this turns the effect on), and distort him and move him around (the position and rotation of my arm control the effects). When I touch his hair with my right hand, I switch to a different effect. Bending my knees adds another effect that finishes the scene off and builds up to a climax leading into the next scene.
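The mapping from arm pose to effect parameters could look something like the sketch below: once the hand has touched the target, the effect switches on, and from then on the arm's extension and direction are normalised to values between 0 and 1. The `GrabEffect` class, the 0.7 m reach and the choice of parameters are hypothetical; for brevity the sketch uses 2D (x, y) positions of the shoulder and hand.

```python
import math
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def arm_to_effect(shoulder: Vec2, hand: Vec2, reach: float = 0.7) -> Tuple[float, float]:
    """Map an arm pose to two effect parameters in [0, 1].

    amount:   how far the arm is stretched, relative to an assumed full reach
    rotation: the arm's direction, mapped from [-pi, pi] to [0, 1]
    """
    dx, dy = hand[0] - shoulder[0], hand[1] - shoulder[1]
    amount = clamp01(math.hypot(dx, dy) / reach)
    rotation = (math.atan2(dy, dx) + math.pi) / (2 * math.pi)
    return amount, rotation


class GrabEffect:
    """Effect that is switched on by a touch and then follows the arm."""

    def __init__(self) -> None:
        self.active = False

    def update(
        self, touching_target: bool, shoulder: Vec2, hand: Vec2
    ) -> Optional[Tuple[float, float]]:
        if touching_target:
            self.active = True  # grabbing the target turns the effect on
        return arm_to_effect(shoulder, hand) if self.active else None


fx = GrabEffect()
print(fx.update(False, (0.0, 1.4), (0.7, 1.4)))  # None: not grabbed yet
print(fx.update(True, (0.0, 1.4), (0.7, 1.4)))   # (1.0, 0.5): arm fully stretched sideways
```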

Live video recording

A great feature of SenSei is the ability to record videos live and use them in the show right away. Since the addition of Augmented Stage, it is also possible to record videos with the 3D camera. This is super cool, because these recordings have no background, so the recorded people can be blended with the other visuals much more convincingly!

Touching and moving graphics

The 24 tracked points on the body can also be used to move graphics and visuals around in the virtual 3D space. Touching a graphic makes it stick to a hand, foot or head, for example, so it moves along with the performer. Touching another graphic releases it, so the graphic stays in its new place. To make dragging look more natural, the position of the arm controls audio-visual effects that rotate the graphic and change its colour (simulating shadow).
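Sticking a graphic to a body part can be sketched by storing the offset between the graphic and the joint at the moment of the touch, and re-applying that offset every frame. The `Sticky` class and the `stick`, `release` and `follow` functions are hypothetical names; in the scene described above, `release` would be called when another graphic is touched.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sticky:
    name: str
    position: Vec3
    joint: Optional[str] = None      # joint the graphic is stuck to, if any
    offset: Vec3 = (0.0, 0.0, 0.0)   # graphic position relative to that joint


def stick(g: Sticky, joint: str, joints: Dict[str, Vec3]) -> None:
    """Attach g to a joint, keeping its current distance to that joint."""
    j = joints[joint]
    g.joint = joint
    g.offset = (g.position[0] - j[0], g.position[1] - j[1], g.position[2] - j[2])


def release(g: Sticky) -> None:
    """Detach g; it stays wherever it was last moved to."""
    g.joint = None


def follow(g: Sticky, joints: Dict[str, Vec3]) -> None:
    """Call once per frame: move an attached graphic along with its joint."""
    if g.joint is not None:
        j = joints[g.joint]
        g.position = (j[0] + g.offset[0], j[1] + g.offset[1], j[2] + g.offset[2])


badge = Sticky("badge", (1.5, 1.0, 2.0))
stick(badge, "right_hand", {"right_hand": (1.0, 1.0, 2.0)})
follow(badge, {"right_hand": (0.0, 1.5, 2.0)})
print(badge.position)  # (0.5, 1.5, 2.0)
release(badge)
follow(badge, {"right_hand": (2.0, 1.0, 2.0)})
print(badge.position)  # still (0.5, 1.5, 2.0)
```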

Control Ableton Live

Augmented Stage is also used to control Ableton Live, so the performer has full control over the timing of the show. In this example I push away a car, which starts the next scene (it makes Ableton Live jump to another ‘Locator’).

Interactive graphics

Graphics with an interactive behaviour attached to them can act as buttons. In the example below I trigger different beats and choose different samples to play the melody. A fun way to do a performance.

Trigger animations

Interactive behaviours can also trigger an ‘animation’. These animations animate the position, rotation, size, transparency or colours of a graphic. Using animations I can, for example, make a graphic fall down when I touch it. MIDI notes coming from Ableton Live can also trigger interactive behaviours. In this example, MIDI notes from Ableton Live trigger animations that change the size and colours of the lights to create a dynamic light show in sync with the music.
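A MIDI-triggered animation can be sketched as a note-on message starting a short interpolation of a graphic's size (colour would work the same way). The `LightShow` and `Animation` classes, the note-to-light mapping, the 0.25-second decay and the velocity scaling are assumptions, and the sketch takes raw three-byte MIDI messages instead of relying on any particular MIDI library. In MIDI, a note-on has a status byte from 0x90 to 0x9F (one per channel), and a note-on with velocity 0 is conventionally treated as a note-off.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Animation:
    start: float      # start time in seconds
    duration: float   # length of the animation in seconds
    from_size: float
    to_size: float

    def size_at(self, t: float) -> float:
        """Linear interpolation between from_size and to_size, clamped in time."""
        if self.duration <= 0:
            return self.to_size
        u = min(max((t - self.start) / self.duration, 0.0), 1.0)
        return self.from_size + (self.to_size - self.from_size) * u


class LightShow:
    def __init__(self, note_to_light: Dict[int, str], base_size: float = 1.0) -> None:
        self.note_to_light = note_to_light  # hypothetical mapping: MIDI note -> light
        self.base_size = base_size
        self.animations: Dict[str, Animation] = {}

    def on_midi(self, message: bytes, now: float) -> None:
        """Handle one raw three-byte MIDI message received at time `now`."""
        status, note, velocity = message[0], message[1], message[2]
        is_note_on = (status & 0xF0) == 0x90 and velocity > 0
        light = self.note_to_light.get(note)
        if is_note_on and light is not None:
            peak = self.base_size * (1 + velocity / 127)  # harder hits flash bigger
            # Jump to the peak size, then shrink back to the base size.
            self.animations[light] = Animation(now, 0.25, peak, self.base_size)

    def size(self, light: str, now: float) -> float:
        anim = self.animations.get(light)
        return anim.size_at(now) if anim is not None else self.base_size


show = LightShow({36: "left_spot"})
show.on_midi(bytes([0x90, 36, 127]), now=10.0)  # kick drum note-on, full velocity
print(show.size("left_spot", 10.0))    # 2.0
print(show.size("left_spot", 10.125))  # 1.5
print(show.size("left_spot", 11.0))    # 1.0
```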

Game

You can create complex interactive scenes by combining different interactive behaviours on multiple graphics and videos. In this example I created a video game. Animations triggered by MIDI notes make graphics fly around. When I touch a graphic, videos are triggered that make the graphic explode and show how many points I won. If a graphic doesn’t touch my hand but hits another part of my body, other videos are triggered that make the graphic explode in a different way and show a negative score. When I touch the drummer, he falls off the stage and the beat is turned off. When I move to the right side and touch the speakers in the corner, Michael Jackson starts singing again and I can move him around.
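The scoring rule of this game can be sketched as follows: find the tracked joint nearest to a flying graphic, and if it is within the hit radius, choose a winning explosion for a hand and a losing one for any other body part. The `resolve_collision` function, the video file names, the hit radius and the 10-point score are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
HANDS = {"left_hand", "right_hand"}


def resolve_collision(
    graphic_pos: Vec3,
    joints: Dict[str, Vec3],
    radius: float = 0.2,
    points: int = 10,
) -> Optional[Tuple[str, int]]:
    """Return (video to trigger, score change), or None if nothing was hit."""
    hits = [
        (math.dist(p, graphic_pos), name)
        for name, p in joints.items()
        if math.dist(p, graphic_pos) <= radius
    ]
    if not hits:
        return None
    _, joint = min(hits)  # the nearest joint decides the outcome
    if joint in HANDS:
        return ("explode_win.mp4", points)
    return ("explode_lose.mp4", -points)


body = {"right_hand": (0.0, 1.0, 2.0), "head": (0.0, 1.6, 2.0)}
score = 0
for pos in [(0.05, 1.0, 2.0), (0.0, 1.55, 2.0), (3.0, 0.0, 0.0)]:
    result = resolve_collision(pos, body)
    if result is not None:
        video, delta = result
        score += delta
        print(video, score)
```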

I like this hybrid of performance, show control and gaming. A new type of performance with a lot of future potential.

Motion tracking

In the example below, one of the 24 tracking points is used to make blood splatter out of my body, as if I got shot by the Terminator in the background. No matter how much I move around, SenSei does not lose track of me and hits me every time!

Actor

In this video I play around with one of the characters in the composition. I like that I am not just a guy mixing videos, but an actual performer who takes part in the scene and interacts with the virtual characters. It makes my presence on stage more interesting.

Complex scenes

In the next example I take part in a famous scene from the movie ‘Star Wars’ and perform video beats in a new, innovative way. Then I mix Star Wars with a Kung Fu movie, but not by simply cross-fading from one to the other. I mix them in a way that is only possible with Augmented Stage! I really like this concept. Nile Rodgers apparently doesn’t.

ASML show

Augmented Stage is also very useful for presentations and talks. Like Microsoft’s PowerPoint or Apple’s Keynote, but on steroids. The presenter can step into the screen and interact with the subject he or she is talking about, literally pointing out important elements and jumping from one subject to another. In 2014 I produced a presentation with Augmented Stage for ASML about their history. Below are two scenes from this presentation. You can watch the whole presentation, and see how Augmented Stage was used, on the ASML presentation project page.

Steppenwolf

In 2016, Schauspielhaus Bochum asked me to design the music and video projections for their theater production ‘Der Steppenwolf’, which is based on Hermann Hesse’s famous novel of the same name and was directed by Paul Koek. For the famous ‘Magic Theater’ scene I used Augmented Stage. This way, I was able to bring this dream world to life and let the actors interact with the virtual world and with each other. Read more about this performance on the Steppenwolf project page.

Presentation at Zapp TV

In 2012, Z@pp (a Dutch TV show for children) invited me to do a presentation with Augmented Stage. I also did a live sample performance with the audience, but that part was not uploaded to YouTube.

But what about the SenSorSuit?

The SenSorSuit is also a great way to control SenSei and Ableton Live. A big advantage of the SenSorSuit (SSS) over Augmented Stage is that the SSS data has virtually no latency, so it is perfect for live improvisation and jamming with other artists. Augmented Stage has a significant latency (around 200 milliseconds), which makes video scratching and rhythmic or melodic triggering hard. Another advantage of the SSS is its visual appeal on stage: the audience is impressed by the wires and sensors of the suit. The Augmented Stage technology is invisible (except for the camera on stage), so it doesn’t impress in that way.
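To put the 200 millisecond figure in musical terms, it helps to compare it with note lengths and video frames. The tempo and frame rate below are example values, not figures from a particular show: at 120 BPM a beat lasts 500 ms, so a sixteenth note lasts 125 ms and the latency spans 1.6 sixteenth notes; at 25 frames per second it amounts to 5 video frames. The helper function names are illustrative.

```python
def beat_ms(bpm: float) -> float:
    """Length of one beat (quarter note) in milliseconds."""
    return 60_000 / bpm


def latency_in_notes(latency_ms: float, bpm: float, per_beat: int = 4) -> float:
    """Latency expressed in note subdivisions; per_beat=4 means sixteenth notes."""
    return latency_ms / (beat_ms(bpm) / per_beat)


def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Latency expressed in video frames."""
    return latency_ms * fps / 1000


print(beat_ms(120))                # 500.0
print(latency_in_notes(200, 120))  # 1.6
print(latency_in_frames(200, 25))  # 5.0
```

A delay longer than a sixteenth note is enough to push triggered sounds noticeably off the beat, which is consistent with rhythmic triggering being hard with Augmented Stage.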

A big issue with the SSS, though, is that the audience doesn’t understand what I am doing on stage. It looks cool, but it is hard to tell which actions on the screen the body movements control. With Augmented Stage that is very clear: if I distort Michael Jackson, the audience watches me walk over to him, grab him by his hair and throw him around.

Another great advantage of Augmented Stage over the SSS is that anybody can step in front of the camera and participate. No suit is needed, which makes it very flexible. I used to do many corporate events with the SenSorSuit where somebody, such as a co-presenter or guest, would join me on stage. With Augmented Stage they can actually participate. For example, my performance can flow seamlessly into the presentation of a product by the managing director, or the presentation of an award to the winner by the head of the jury.

The history of Augmented Stage

Augmented Stage and SenSei have a long history, starting in the 1980s. They are the result of many experiments and prototypes, such as the 1995 video sampler, EboNaTor, SenSorSuit, Frame Drummer Pro, skrtZz pen, DVJ mixer, SenS I, II, III, IV and Senna.

During the development of Augmented Stage and SenSei it became clear that the time was ripe to turn this prototype into a release-worthy product. The main purpose of starting EboStudio was to create the ideal audio-visual instrument, and to make that instrument available for everybody. This led to the release of EboSuite in 2017.