I have been using live recordings of the audience in my live shows since 2004. Combined with the SenSorSuit, this concept was a great success. I did many live sampling shows on a very diverse range of stages: at music festivals of course, but also at event openings, product presentations, award shows, theater shows, conferences, branding events, you name it.

Live experience

Live sampling is a great way to involve the audience in a performance. It is fun, and it also shows that what is happening on stage is really happening live. This makes the audience much more appreciative and enthusiastic.

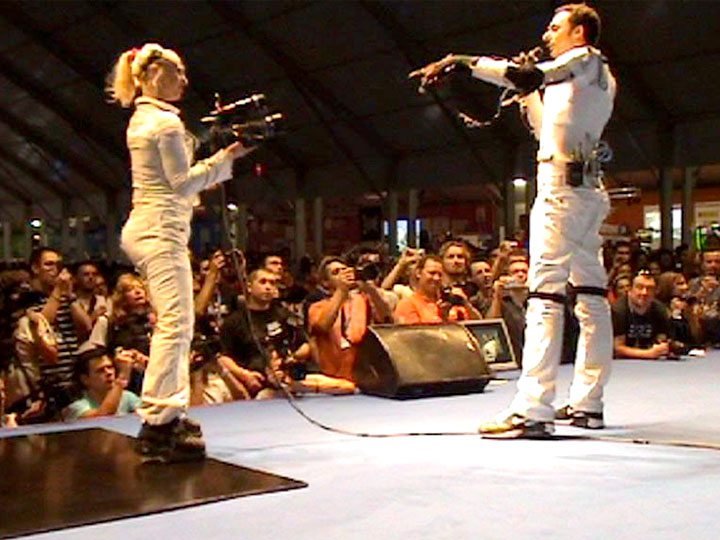

With the SenSorSuit I can step from behind my computer, walk to the audience and engage them even more.

Example

At the Lowlands festival in 2008 I did a live video sample performance with my AV instrument, then called SenS IV. I did three performances on Saturday and Sunday in the festival’s chill area, sampling the people hanging around there and creating my own little party. Mascha Rutten joined me on stage to film (she has been filming during my shows since 1996). The videos below show how I used SenS IV, the SenSorSuit and Logic Pro (music software) for that performance.

Software

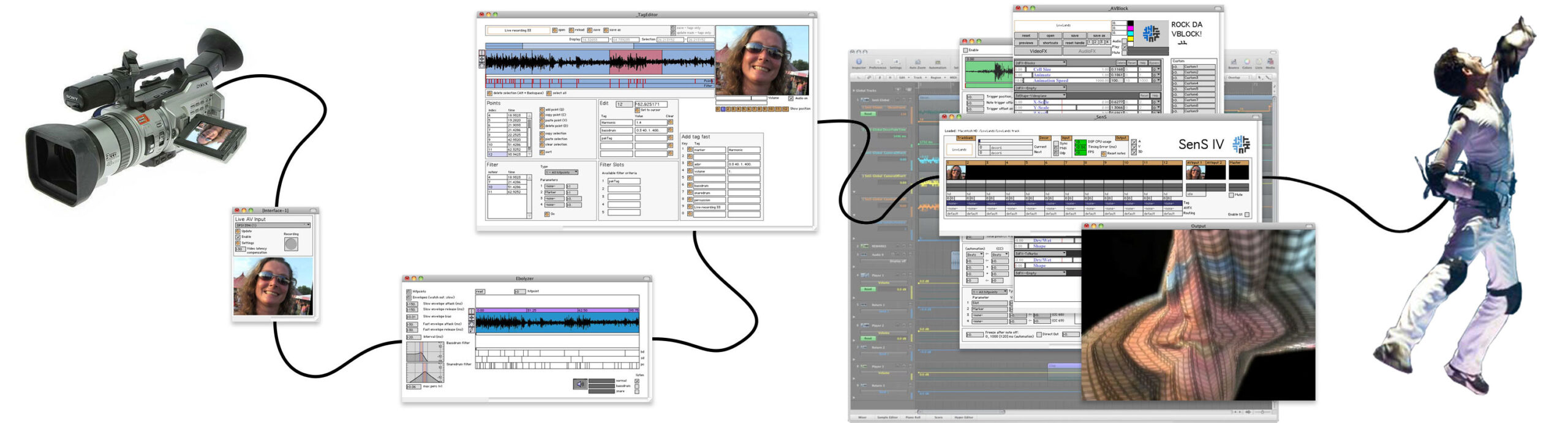

The live sampling software worked like this: I recorded the audience on the fly with my SenSorSuit, or automatically using Logic Pro and a recording app. The recording was then sent to an analyser that made a guess at the best sample. This sample was automatically loaded into SenS IV and into the ‘Tag Editor’, where I could check it manually and add extra information (e.g. the center of a face). With my SenSorSuit I controlled the audio-visual effects, triggered the sample and fine-tuned the metadata.

Audio-visual effects

For my live sample shows I used SenS IV’s real-time 3D audio-visual effects to morph voices into basslines, drum sounds, melodies and crazy sound effects while the faces turned into abstract visuals.

HeeYaa (a remix of ‘Hey Ya!’ by Outkast) is a good example of this. All the sounds and visuals you hear and see are live-recorded voices and faces, morphed into new sounds and visuals. These effects were custom-made for SenS IV. Only a bass drum and a snare drum were added to give the track a more solid base.

Real-time motion graphics

Another feature that contributed to the success of the live sample concept was the new ‘real-time motion graphics software’ we made for SenS IV. This software gave me much more freedom to mix and blend videos and to add graphics, live camera streams and 3D shapes. In 2008 I did a performance to launch the website for the Stranger Festival video remix contest. It was the first time I used this software, so the designs were still fairly simple. For this performance Guillermo joined me on the video drum kit.

In September 2008 the rendering of the 3D video mix and real-time motion graphics was moved from the CPU to the GPU (a dedicated graphics processor in a computer). This let me use more and bigger graphics, more complex ‘Decor Frames’ (composition presets), more 3D audio-visual effects and wilder virtual camera paths. I first used this new version of SenS IV in a performance for Sony PlayStation.

More examples

In 2011 we built the successor of SenS IV: SenSei. We expanded the video mix and real-time motion graphics capabilities (and we made it interactive!). With SenSei I also did many live video sample performances, for example at LEFestival. For this performance I used a Kinect 3D camera to film the audience, which is why they are cut out so cleanly.

For Ray-Ban I did a few live video sample performances with SenS IV on Queensday 2009 in Amsterdam. With the real-time motion graphics engine I made crazy, sleazy Queensday designs for these performances.

For the Dutch Film Museum I created a track with movie fragments from their archive, called Celluloid Remix. I played this track live at EXIT festival in 2009. I like the 3D skrtZz solo with the airplane. In the live version I also used samples recorded from the crowd.

For the Dutch children’s TV show De Buitendienst I produced the ‘Haai aLarm!’ installation, using the live sample concept, at the Zapp Your Planet festival, a fundraising event for endangered sharks. Children recorded two sounds in front of a 3D camera: an ‘alert’ sound and the shout ‘Haai alarm!’ (‘Shark alert!’). Once they had recorded their sounds, their face and voice were immediately scratched and manipulated in a fun way. The end result, a personalised shark video, could easily be shared on social media.

The beginnings

For my first live video sample performances in 2004, I used my Frame Drummer Pro and SenS I software. In 2007, SenS IV was optimized for live sampling, with dedicated editors and monitors and support for live 3D audio-visual effects and real-time motion graphics. Besides that, SenS IV was (almost) crash-proof, so I dared to take more risks on stage. The videos below show studio tests with the Frame Drummer Pro together with Aart and Thijs (right and middle), and a live capture performance with SenS II in 2005 (left), in which I sampled and skrtZz-ed the audience and used composition assistance technology to make beats with my body motion.

Demonstrations

In 2008, Dutch TV show Klokhuis made an item about Eboman. In that item I showed the SenSorSuit and the live video sample concept.

I gave a demonstration of the SenSorSuit and the live sampling concept during a live sampling performance I did at EXIT festival in Serbia in 2009.

More examples

For a performance for Creative Learning Lab in Amsterdam (2008), Guillermo joined me on the video drum kit. Before the show, we sampled many people at the entrance of the venue. Right after sampling, I skrtZz-ed the recordings live over some funk music with my SenSorSuit (see video on the left). Later that day we did a performance with these recordings (video on the right).

In 2008 I produced a live sampling show for the opening of a new cultural centre in Haarlemmermeer, containing a library, art museum ‘Pier K’, concert hall ‘Pophart’ and theatre ‘De Meerse’. Guillermo and Glen performed the show in my place, with automated video skrtZzes, because I was in Brazil on a live sampling tour.

Interactive tracks

This concept is closely related to the Interactive Tracks project. Interactive Tracks are interactive audio-visual compositions (iOS apps) with integrated creative functions to remix and personalize them. Tips and instructions, integrated in the track, help the user during this process. The resulting personalized track can easily be shared on social media. Read more about that project on the project page.