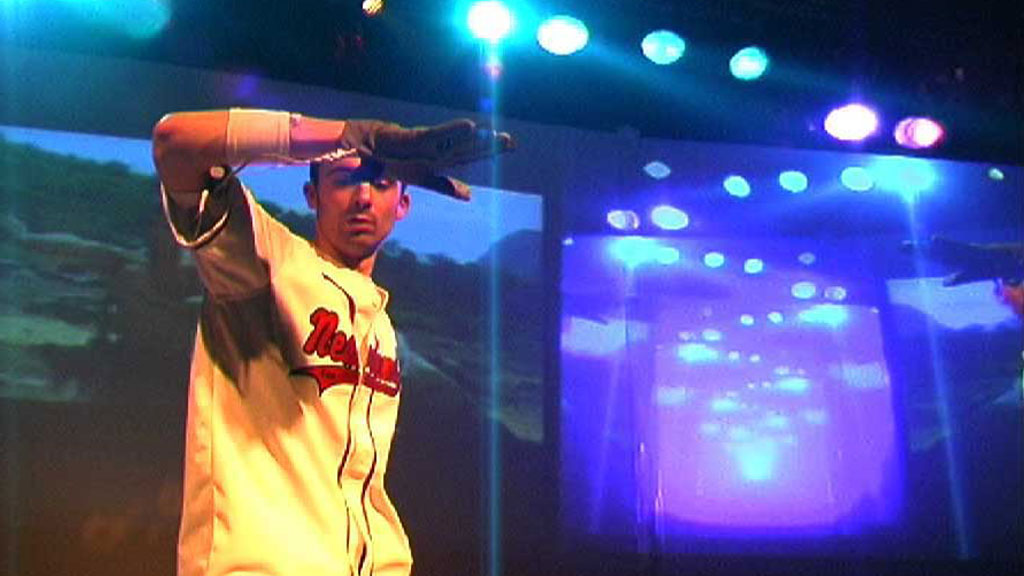

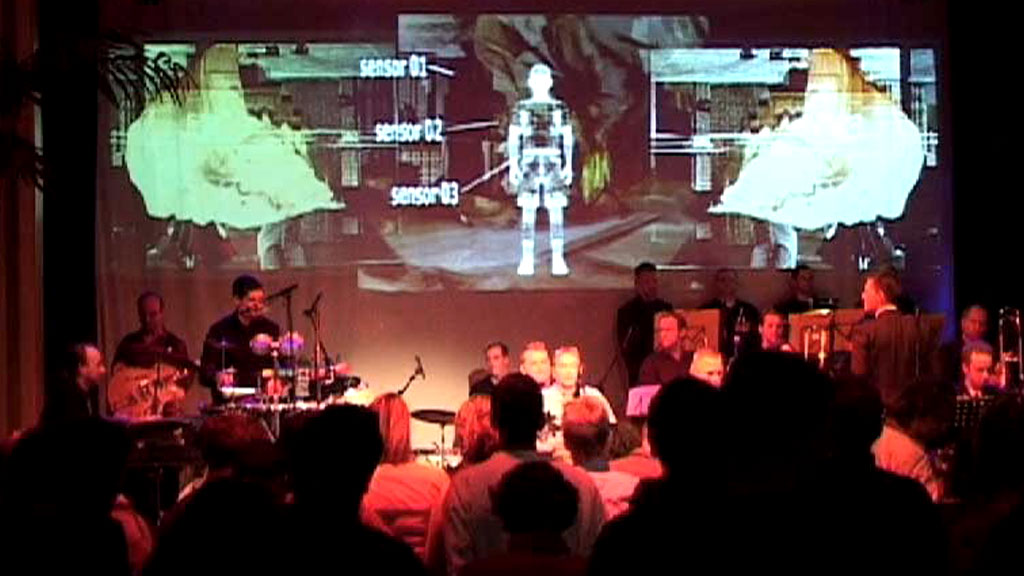

In 1999 I developed my first SenSorSuit, a body motion tracking suit to control my live shows. The sensors are made of door hinges with mounted potentiometers. I built this suit and the sensor-to-MIDI translator with my father and Chris Heijens.

Connecting to the audience

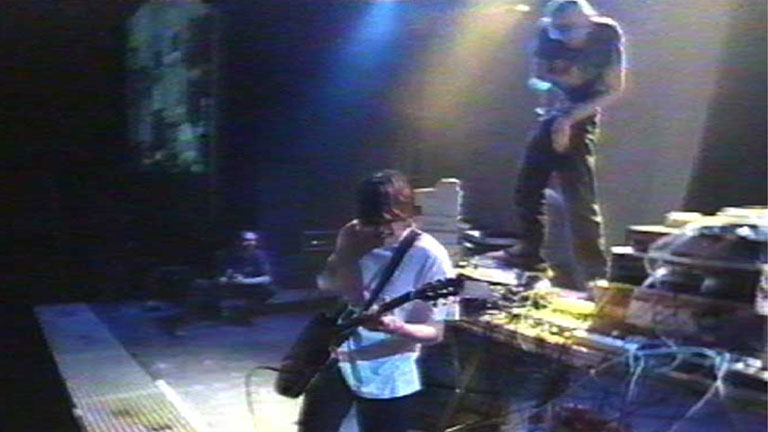

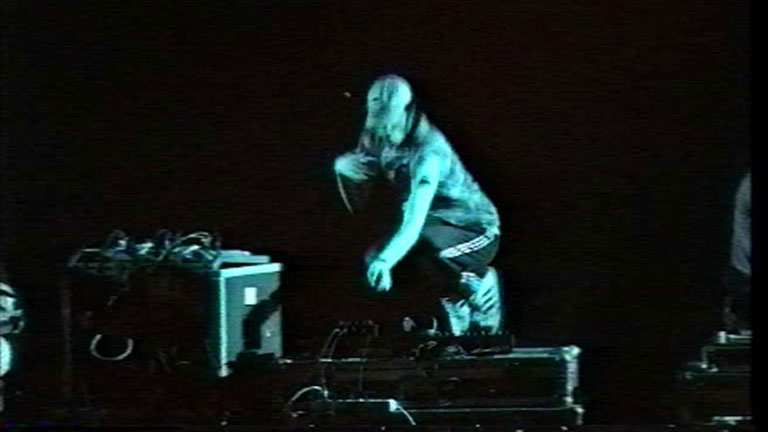

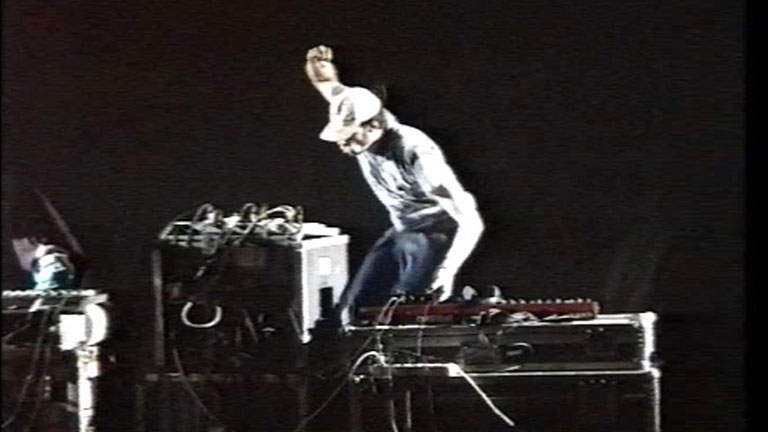

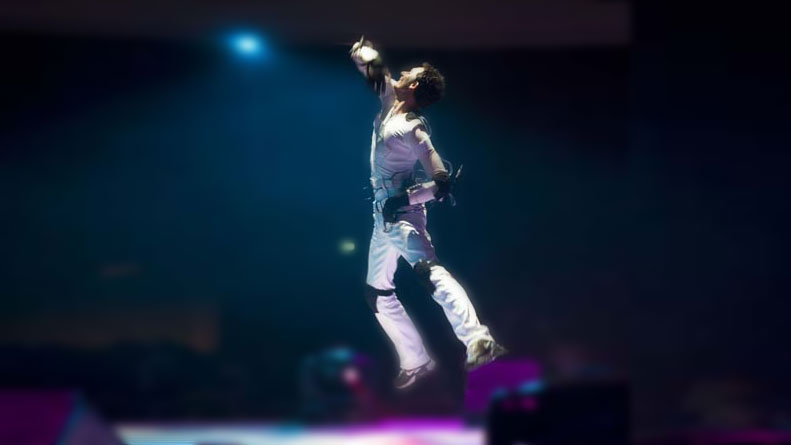

During my live shows I used to jump around, stand on my table and run to the front of the stage to interact with the crowd. I was jealous of the musicians in my band, because they could jump around and still play their instruments. I was stuck behind a big heap of heavy gear.

So I decided to create a new interface to control my show: a motion tracking suit. That would allow me to jump around and use this energy to create my tracks live. A suit like this didn’t exist, so I had to build it myself.

SenSorSuit

I went to the local hardware shop and bought a few metal door hinges. My father mounted potentiometers to these hinges, to translate the angle of the hinge into an electrical signal. With Chris Heijens I created a small computer to convert this signal into MIDI (a very common signal to control music software) and to prepare this MIDI signal so I could easily use it with MIDI software.
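As a rough illustration of this kind of conversion, the sketch below scales a digitized potentiometer reading to a 7-bit MIDI value and packs it into a Control Change message. It is not the original firmware: the 10-bit reading range, the function names and the controller number are assumptions made for the example.

```python
def reading_to_cc_value(reading, lo=0, hi=1023):
    """Scale a raw sensor reading in [lo, hi] to a 7-bit MIDI value (0-127).

    The default 0-1023 range assumes a 10-bit converter (hypothetical).
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    clamped = max(lo, min(hi, reading))  # ignore readings outside the calibrated range
    return round((clamped - lo) * 127 / (hi - lo))


def control_change(channel, controller, value):
    """Build the three bytes of a MIDI Control Change message."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("channel must be 0-15, controller and value 0-127")
    return bytes([0xB0 | channel, controller, value])


# Example: a fully opened hinge on hypothetical controller 20, MIDI channel 1.
message = control_change(0, 20, reading_to_cc_value(1023))
```

The `lo` and `hi` parameters stand in for a calibration step: a hinge on an elbow never uses the full travel of its potentiometer, so the usable range would be narrower than the converter's full range.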

At a theater costume shop I bought a ballet suit and mounted the hinges in my armpits and elbows.

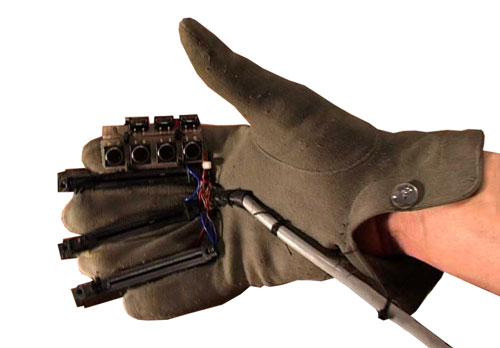

In an electronic shop I bought buttons and DJ mixer faders. With my father I mounted these faders and buttons into a pair of gloves. We used my father’s wedding gloves, because they had the right solid texture.

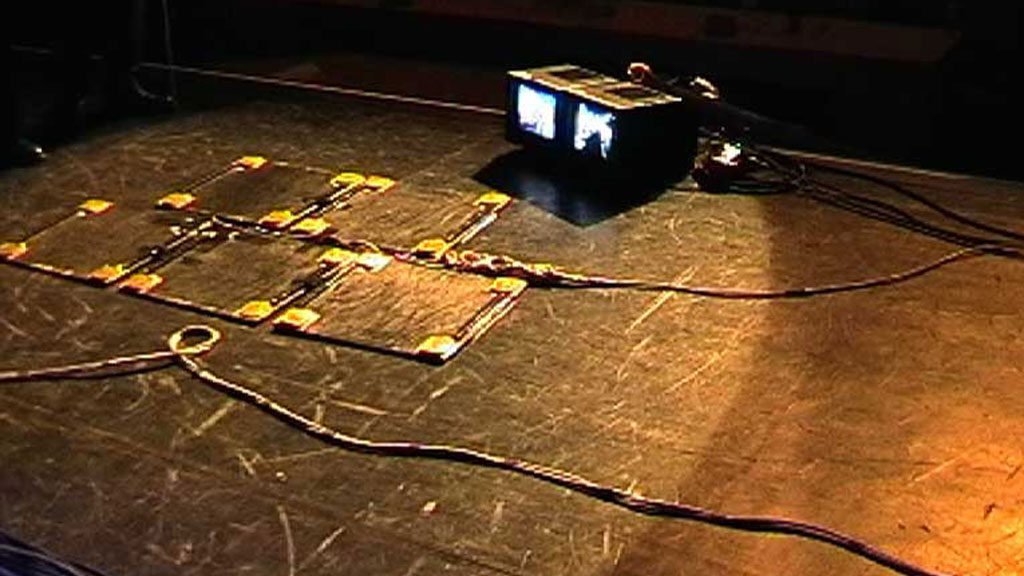

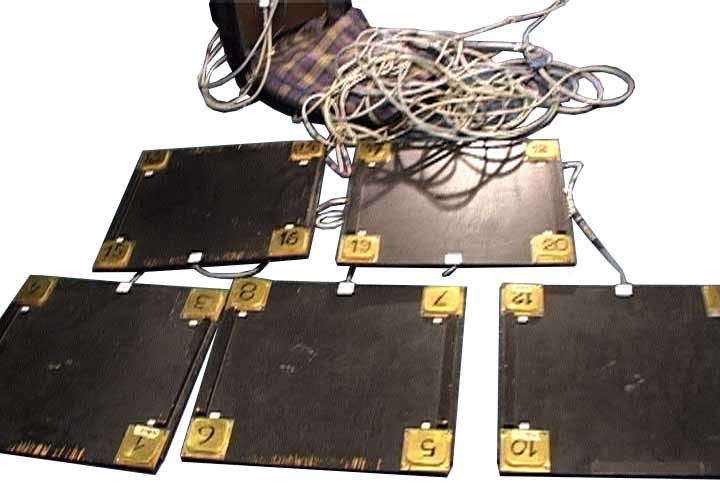

To select samples and to make program changes, my father and I created floor panels with homemade buttons. This allowed me to quickly load a new sample or jump to another track, while playing with video samples live.

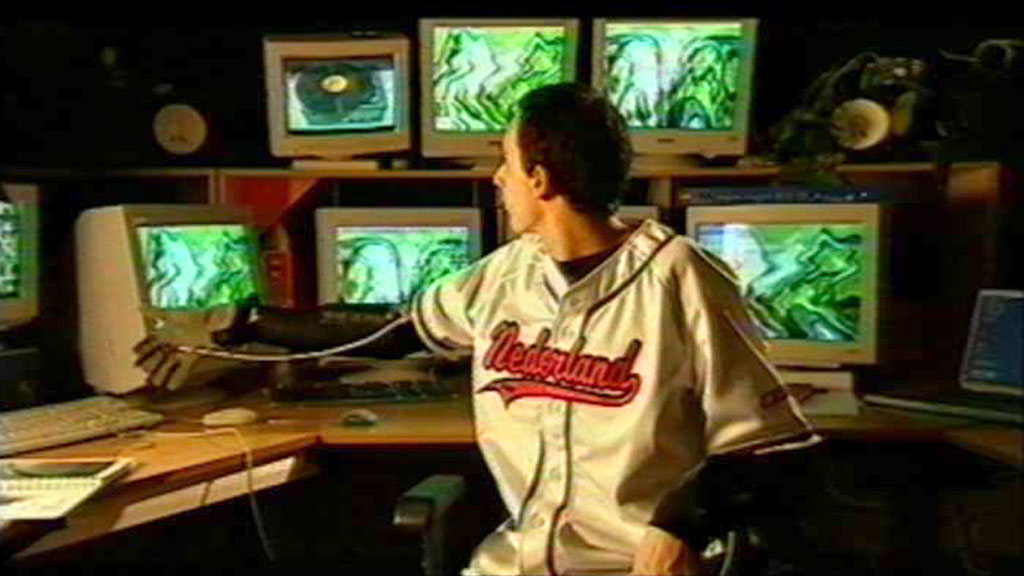

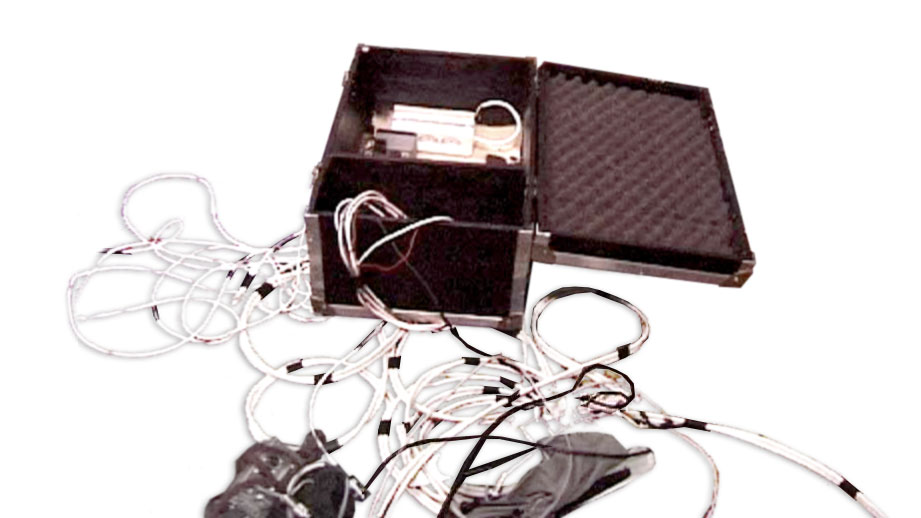

Big cables connected the door hinges to each other, to the gloves and to the small computer. This computer was built into a solid wooden box. From this wooden box the MIDI signal went to my computers running audio-visual software (Image/ine).

The box also contained an audio mixer. The sound of my audio-visual computers went into the box as well and was mixed using two faders on my gloves. I don’t have a recording of the live show, just a recording of the computer output.

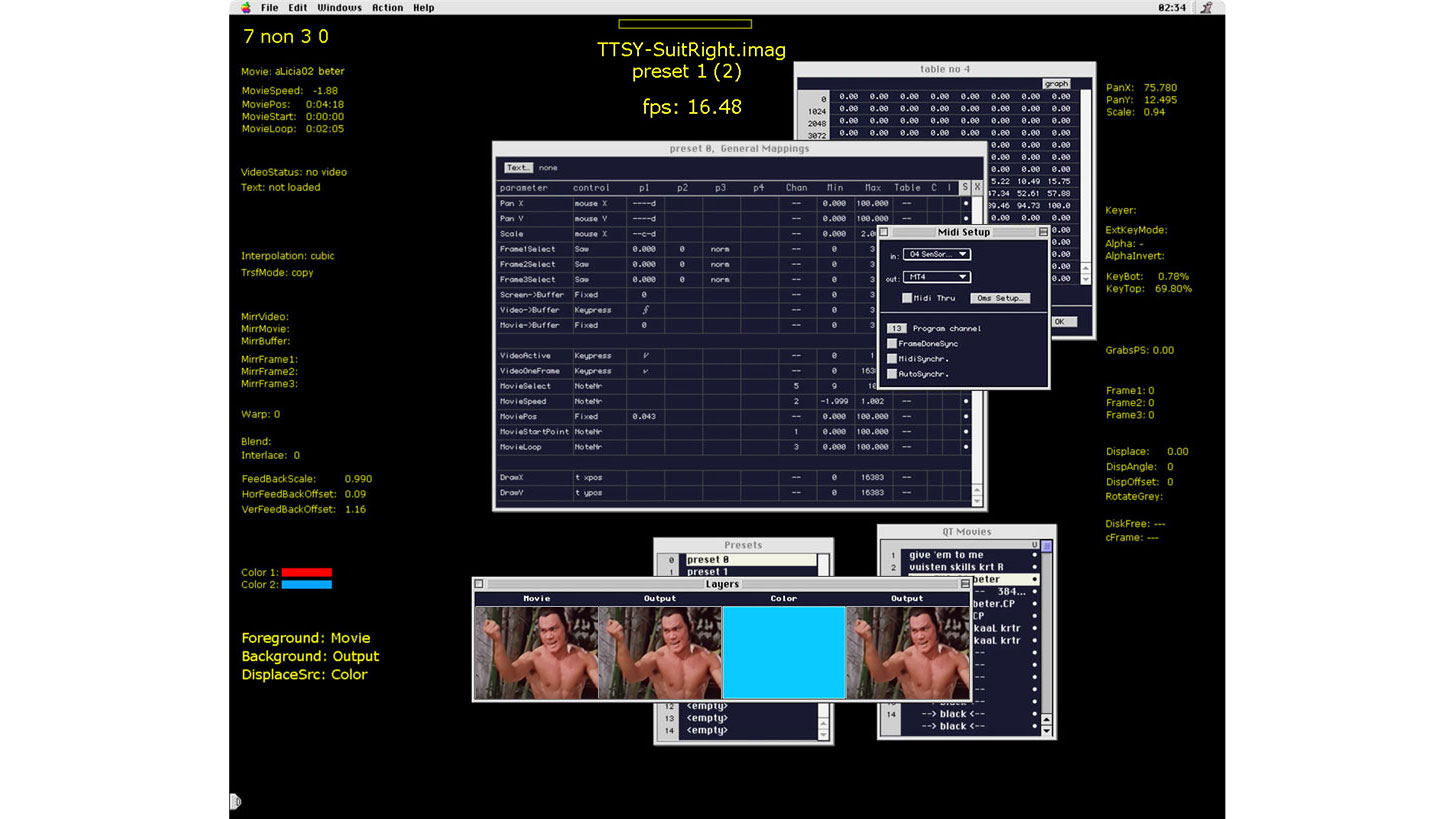

Software

Image/ine is innovative real-time video software developed by STEIM. It allowed me to map MIDI to pitch, trigger and jump through video. I used two computers running Image/ine, one for each arm. This set-up had serious latency issues. Especially when triggering video, the system responded late, with a latency of more than 200 milliseconds. To solve this, I used another way to stop/start (trigger) videos: I made the videos loop constantly. To stop them I quickly made this loop very short, so it would play only one frame. The video seemed to pause, but was actually still playing, constantly looping the same frame. This kept the video buffered and made the system respond much quicker when I restarted the video (made the loop big again).
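The loop trick can be sketched in a few lines. This is a simplified model, not Image/ine's actual behaviour or API: the `LoopingClip` class and its methods are hypothetical names. The point is that the clip never stops; "pausing" only shrinks the loop to a single frame.

```python
class LoopingClip:
    """A clip that always plays, restricted to a loop region (hypothetical model)."""

    def __init__(self, total_frames):
        self.total_frames = total_frames
        self.loop_start = 0
        self.loop_length = total_frames
        self.offset = 0  # playhead position inside the loop

    def set_loop(self, start, length):
        # Keep the loop inside the clip and at least one frame long.
        self.loop_start = max(0, min(self.total_frames - 1, start))
        self.loop_length = max(1, min(self.total_frames - self.loop_start, length))
        self.offset %= self.loop_length

    def next_frame(self):
        # Called once per display tick; playback never stops.
        frame = self.loop_start + self.offset
        self.offset = (self.offset + 1) % self.loop_length
        return frame


clip = LoopingClip(total_frames=100)
clip.set_loop(10, 1)                           # "pause": loop a single frame
paused = [clip.next_frame() for _ in range(3)]
clip.set_loop(10, 4)                           # "restart": widen the loop again
resumed = [clip.next_frame() for _ in range(5)]
```

While the loop is one frame long, `next_frame` keeps returning frame 10, so the picture looks frozen although the player is still running. Widening the loop resumes motion from that same frame without a separate start command.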

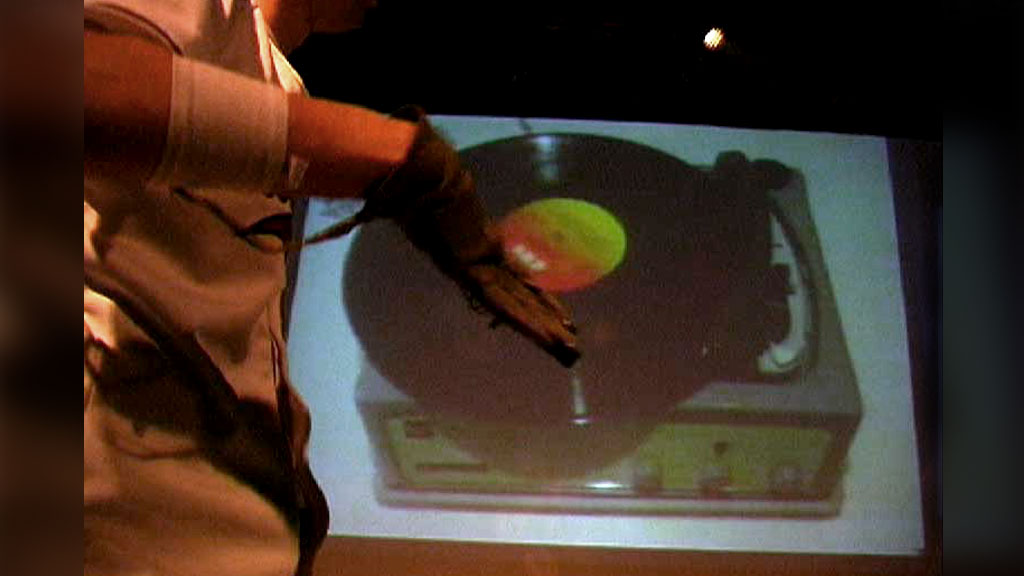

I used the fader between my right index and middle finger to trigger a video (make the loop small/large). The small computer mapped the output of this fader to MIDI in such a way that a small change would make the loop very small/large. Often I rendered a black frame at the beginning of a sample so I could turn the video off (make it black) by going quickly to the beginning (closing the door hinge in my armpit) and making the loop one frame (closing the fader). There was a button on the glove that did this as well, but I used the fader mostly, because I usually combined this technique with glitchy loop effects.

The video below shows how Image/ine was used during a live performance. The animating numbers are the MIDI data coming in and the mapped video parameters responding. It also shows some other Image/ine windows, like the video database, the MIDI map window and the table window, used to fine-tune the MIDI mapping.
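One way to get such a steep response is to let only the first few steps of the fader matter. The sketch below is an assumption about how a mapping like this could look, not the mapping the small computer actually used; the function name and the `travel` value of 8 steps are invented for illustration.

```python
def fader_to_loop_length(fader, max_frames, travel=8):
    """Map a 7-bit fader value (0-127) to a loop length in frames.

    Only the first `travel` steps change the loop length (hypothetical value).
    A closed fader gives a one-frame loop, anything at or past `travel`
    gives the full sample.
    """
    fader = max(0, min(127, fader))
    if fader >= travel:
        return max_frames
    return 1 + (max_frames - 1) * fader // travel


closed = fader_to_loop_length(0, 100)       # one-frame loop: video appears paused
nudged = fader_to_loop_length(8, 100)       # small movement: full loop again
halfway = fader_to_loop_length(4, 101)      # in-between values give short, glitchy loops
```

The short in-between range is what would make glitchy loop effects playable: moving the fader slightly within the first steps changes the loop from one frame to a handful of frames.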

‘Real-time’ audio-visual effects

Image/ine even supported real-time effects, but these effects were pretty basic, worked only on the visual track and were too heavy for my computers when I was doing complex video triggering. Therefore I used audio-visual samples with pre-rendered audio-visual effects. Playing with the timing of these videos allowed me to play live with the timing of the effects.

I made sure the pre-rendered AVFX in the sample had a simple, logical development over time, starting with no effect and then adding more effects as time progressed. This way, I could easily move from a ‘clean’ visual/sound to the distorted visual/sound, resulting in a smooth, natural sounding/looking audio-visual effect solo.

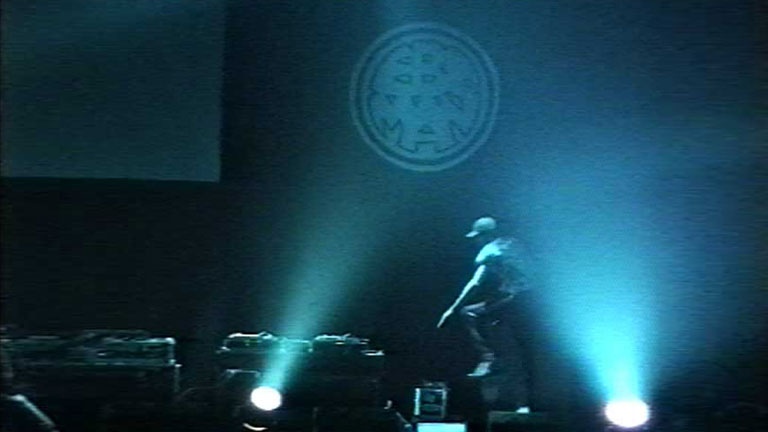

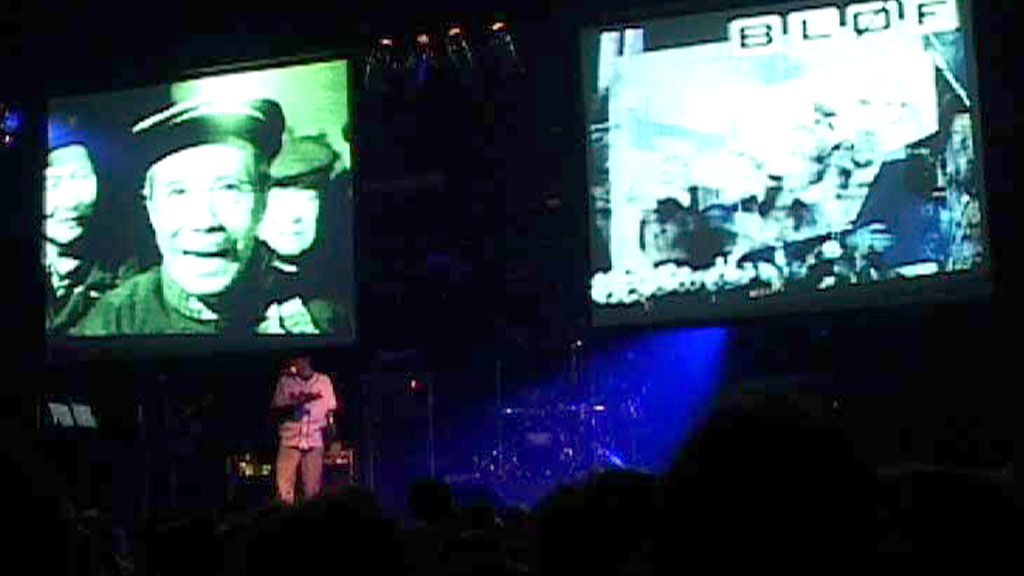

Performances

I used the SenSorSuit for many live shows. I also used it for the radio scene of d DriVer must be a MAdMaN movie.

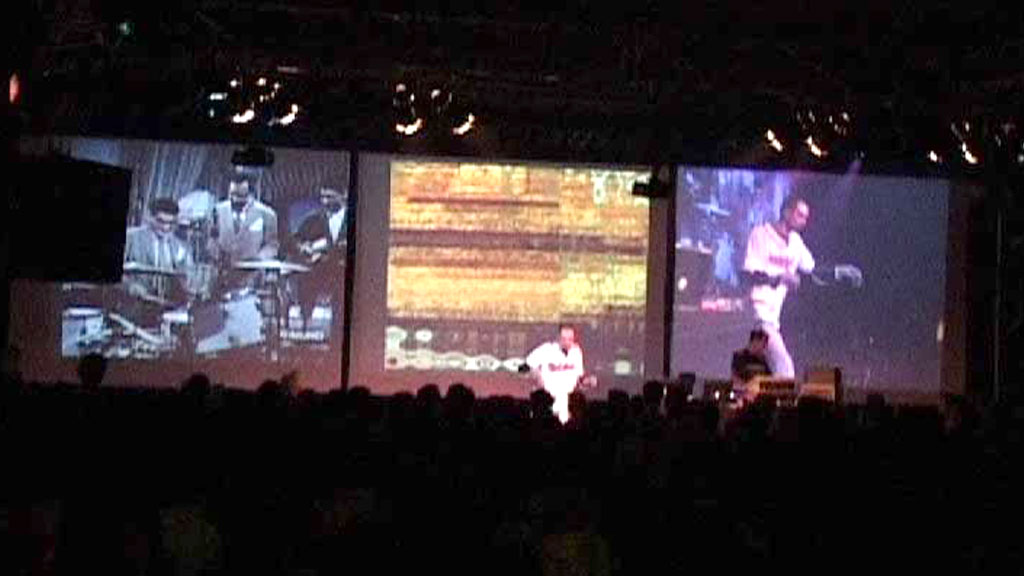

In the summer of 2000 I did a performance with my SenSorSuit for the official opening of the quinquennial maritime event SAIL in Amsterdam, the Netherlands. This was the first time I did a tailor-made performance for a commercial brand, in this case DutchTone (a telecom provider).

For this performance I remixed a few Eboman tracks to fit with the theme of SAIL. I added maritime video samples that I filmed in the harbor of Amsterdam and took from movies. I experimented with audio-visual effects, skrtZz-ing and simple storytelling.

Writing tracks

I also used the SenSorSuit to write tracks. I produced many DVJ 2.0 tracks with the SenSorSuit. I recorded skrtZz solos and then edited these solos to create new tracks. The videos below show two examples. The videos on the left are the raw skrtZz recordings and the videos on the right the compositions that include these solos. On the DVJ 2.0 project page you’ll find more examples.

Portable

I used the SenSorSuit for many performances all over the world. To make it easier to travel with the SenSorSuit I moved the small computer and audio mixer from the box into a traveling bag. This bag also carried the laptops, the cables and the SenSorSuit. So the whole set-up (except the floor panels) fitted in one bag and I could carry it as hand luggage on a plane.

The video below shows me and Chris working on the travelling bag in my studio.

In 2001, two weeks after 9/11, I went to Canada for a performance at the Montreal Film Festival. I was a bit worried how the border police would react to my crazy bomb-looking hand luggage when they put it through their scanner. They were confused and gathered around my SenSorSuit. There was a lot of discussion and they ran the case through their scanner two times. Oops, I got a bit more worried.

I got a bit nervous when they asked me to come and have a look at my luggage. I opened the case and showed the wires and stuff and tried to explain about the skrtZz technique, audio-visual effects and the SenSorSuit, but they were not interested. They wanted me to remove the bottom plate to show the small computer. I removed it and showed what was underneath. That was a surprise for me too: a dead mouse. They started laughing and showed the dead mouse on their scanner. The crazy suit didn’t impress them at all, but the dead mouse was a good laugh for them. “You are not supposed to bring animals into the country”, they said, “or dried meat.”

SenSorSuit 2.0

In 2004 I improved this SenSorSuit and created SenSorSuit 2.0, again with help from my father and Chris Heijens. This time I used light flex sensors and touch-sensitive sensors.

Team

Produced and developed by:

- Harry Hofs

- Jeroen Hofs

- Chris Heijens