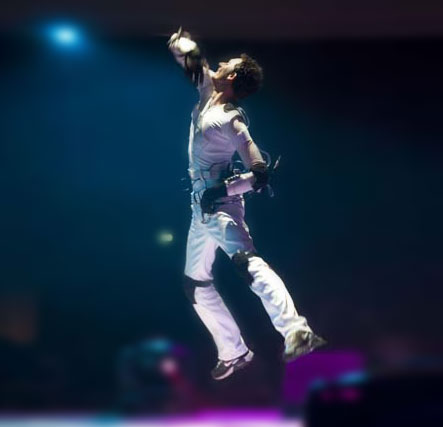

In 2004 I upgraded the SenSorSuit, which I had developed in 1999 to control my shows with body motion. The new suit consists of flex sensors on my knees, waist, elbows, shoulders and wrists, buttons on my arms, and gloves and shoes with touch-sensitive sensors. It is a great interface for live performances that I still use to this day.

The SenSorSuit I created in 1999 was great, but had some serious issues. The metal door hinges on my joints made noise and my skin would often get caught in them, so a performance would literally cost me blood, sweat and tears. Also, the heavy cables that connected me to the computer translating the sensor data into MIDI were inconvenient and limited my freedom to move around on stage.

Sensors

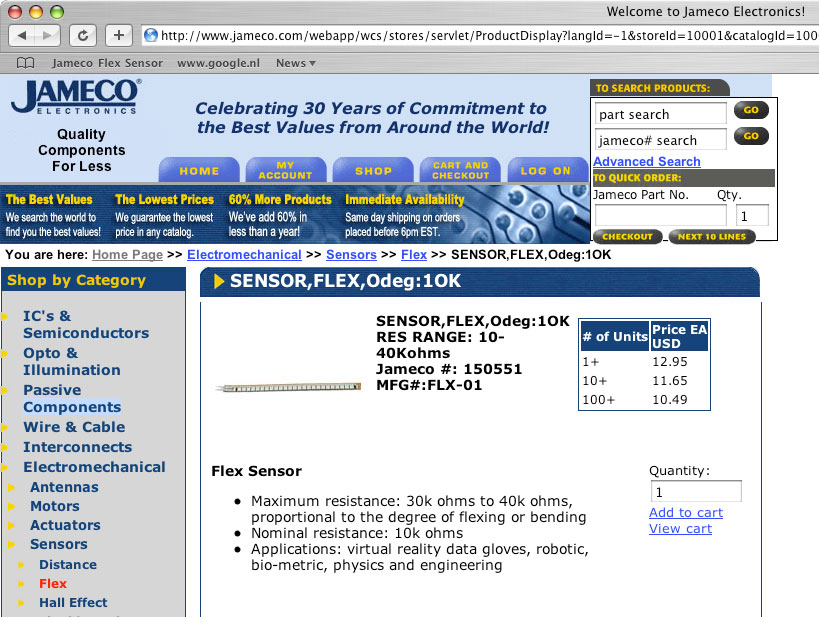

So, in the summer of 2004 I upgraded the SenSorSuit concept. After some research I found very light flex sensors. These sensors are normally used to measure how much a surface bends or stretches, and they turned out to work very well for measuring the bending of human joints. They are very sensitive, so even the slightest bend generates a lot of data. Noise filtering was therefore needed to make the signal useful.
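To illustrate the kind of noise filtering mentioned above, here is a minimal Python sketch. It is not the code that ran in the suit: the function name `smooth`, the `alpha` and `deadband` parameters and their default values are all assumptions chosen for illustration. It combines an exponential moving average with a small dead band, so that jitter around a resting position is ignored while a real bend still comes through.

```python
def smooth(readings, alpha=0.2, deadband=2.0):
    """Smooth raw sensor readings and suppress tiny changes.

    alpha and deadband are hypothetical tuning values, not taken from the suit.
    """
    if not readings:
        return []
    filtered = []
    average = float(readings[0])  # running exponential moving average
    reported = average            # last value that was actually passed on
    for raw in readings:
        # Move the average a fraction of the way towards the new reading.
        average += alpha * (raw - average)
        # Only report a new value once it has moved far enough.
        if abs(average - reported) >= deadband:
            reported = average
        filtered.append(reported)
    return filtered


if __name__ == "__main__":
    jitter = [100, 101, 99, 100, 101]
    print(smooth(jitter))
```

A lower `alpha` gives a steadier signal at the cost of a slower response, which matters for an interface that is played like an instrument.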

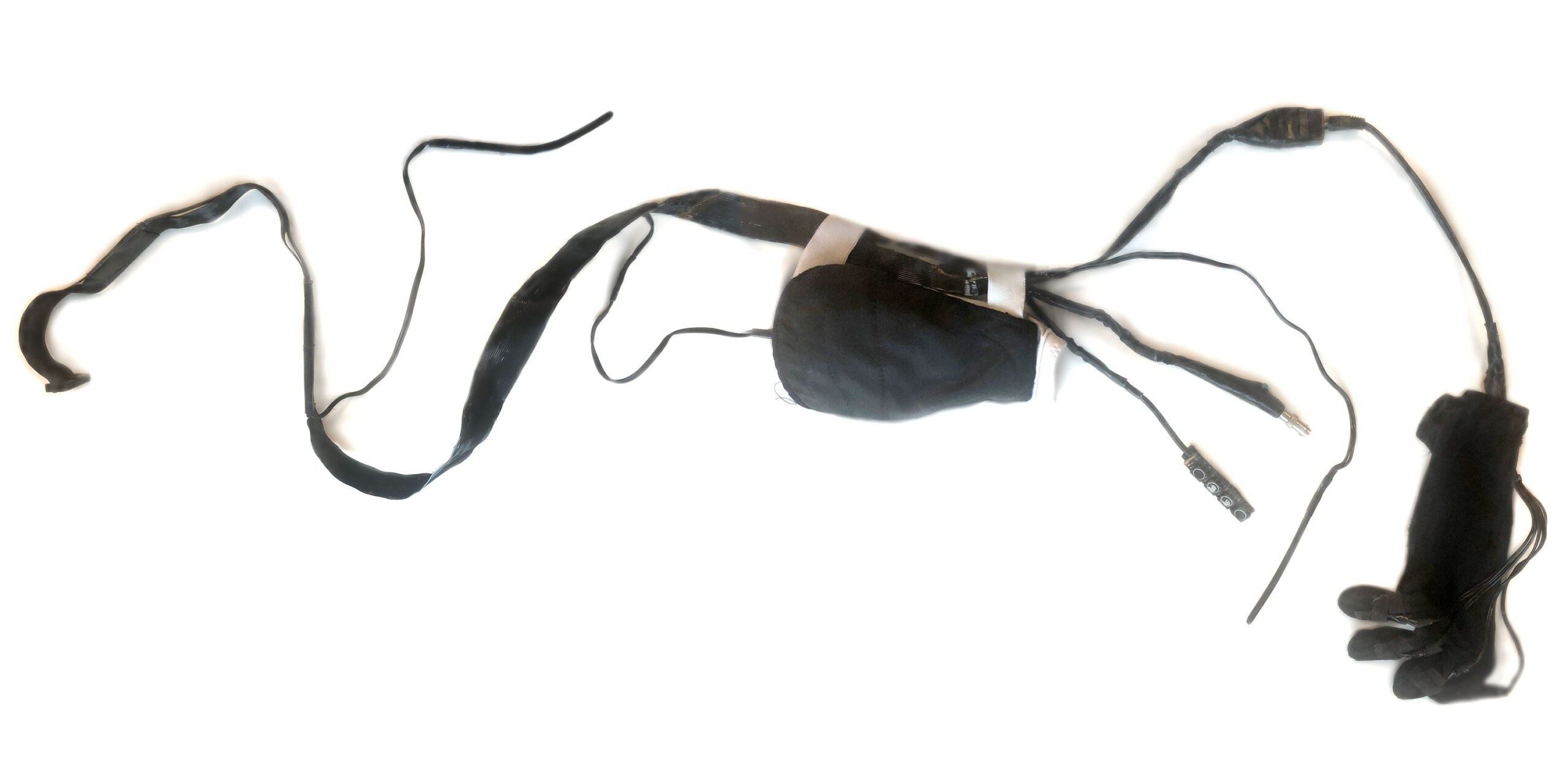

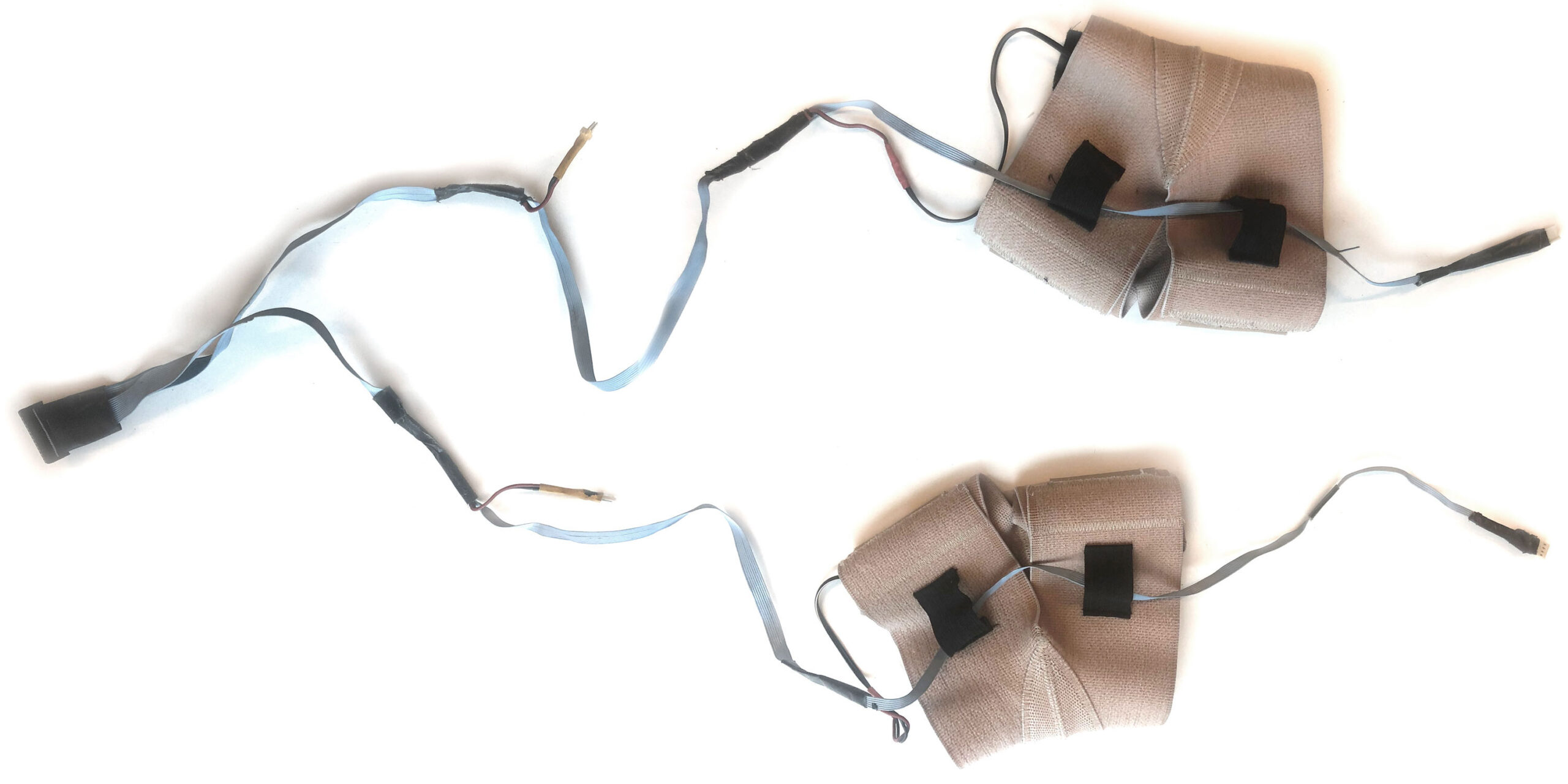

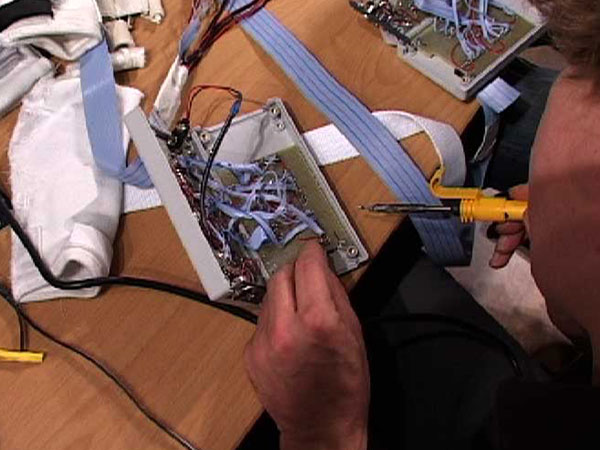

My father helped with the production of the sensor cables. I expected the sensors to get damaged quickly, so I initially attached plugs to them so they could easily be replaced. But the sensors proved very reliable after we strengthened them with a plastic base. So in the end my father built the sensor cables for the armpits, elbows and wrists in one piece, with the sensors attached. Besides that, four buttons and a button to calibrate the suit are attached to each arm.

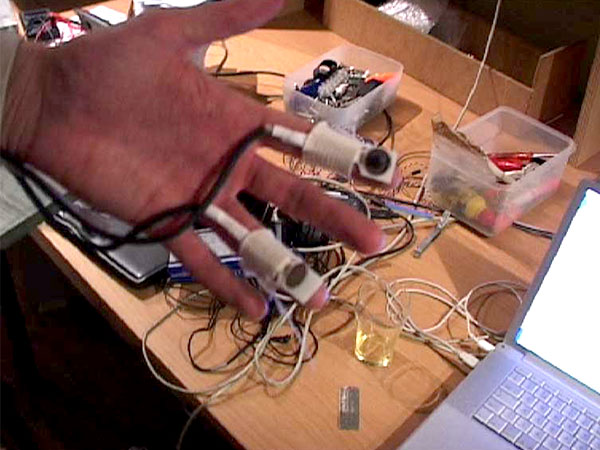

Touch-sensitive sensors mounted on the gloves are used to send MIDI triggers and velocity data. The same sensors are mounted in the soles to send foot pressure, but I don’t really use the soles much.
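As a rough sketch of how a touch sensor can become a MIDI trigger with velocity, the Python example below builds a standard three-byte MIDI note-on message when the pressure rises past a threshold. The function name `touch_to_note_on`, the normalised pressure range of 0.0 to 1.0, the threshold and the default note number are assumptions for illustration, not details of the actual gloves.

```python
def touch_to_note_on(pressure, previous, threshold=0.1, note=60, channel=0):
    """Return a MIDI note-on message when a touch starts, otherwise None.

    pressure and previous are assumed to be normalised to 0.0..1.0.
    """
    # Trigger only on the rising edge, so a held touch does not retrigger.
    if previous < threshold <= pressure:
        velocity = max(1, min(127, round(pressure * 127)))
        # 0x90 is the MIDI note-on status byte; the low nibble is the channel.
        return bytes([0x90 | channel, note, velocity])
    return None


if __name__ == "__main__":
    message = touch_to_note_on(1.0, 0.0)
    print(message.hex())
```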

Converter

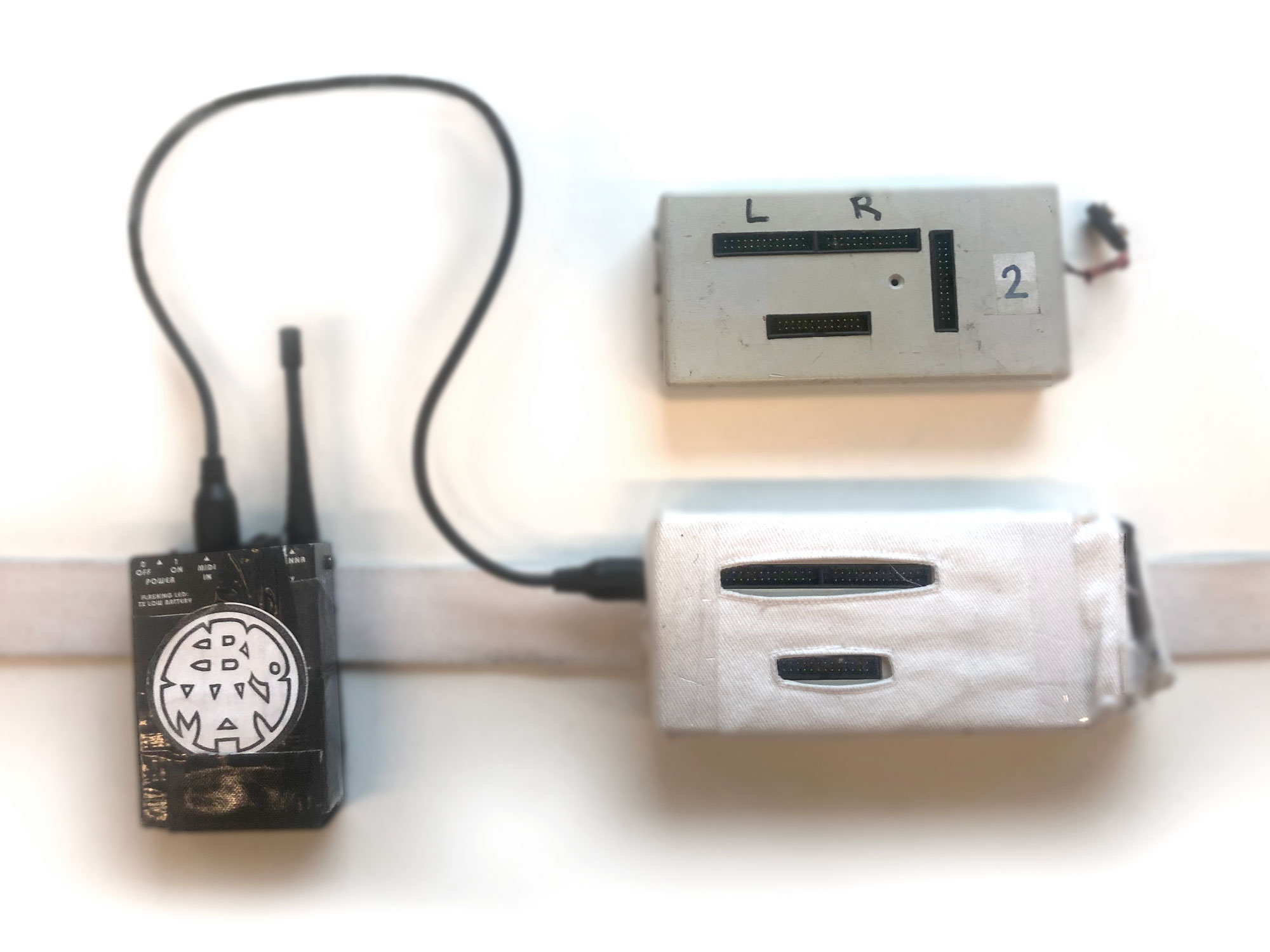

With Chris Heijens I designed a new small computer to convert the sensor data to MIDI. The computer cleans the data and maps the raw data to specific MIDI ranges. The computer is calibrated with a button on the arms, to make sure the SenSorSuit always outputs the same data.
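The mapping from raw data to a MIDI range can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `to_midi` and the idea that calibration stores one `rest` reading and one `bent` reading per sensor are hypothetical, and the real converter may work differently.

```python
def to_midi(raw, rest, bent):
    """Map a raw sensor reading to a MIDI value in the range 0..127.

    rest and bent are assumed calibration readings for a relaxed
    and a fully bent joint.
    """
    if bent == rest:
        return 0  # no usable range, avoid dividing by zero
    # Position of the joint between the two calibration points.
    position = (raw - rest) / (bent - rest)
    # Clamp so readings outside the calibrated range stay valid MIDI.
    position = min(1.0, max(0.0, position))
    return round(position * 127)


if __name__ == "__main__":
    for raw in (300, 400, 700, 900):
        print(raw, to_midi(raw, rest=300, bent=700))
```

Because the output depends only on where a reading falls between the two calibration points, recalibrating before a show keeps the same body position mapped to the same MIDI value, even if the sensors sit slightly differently on the body.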

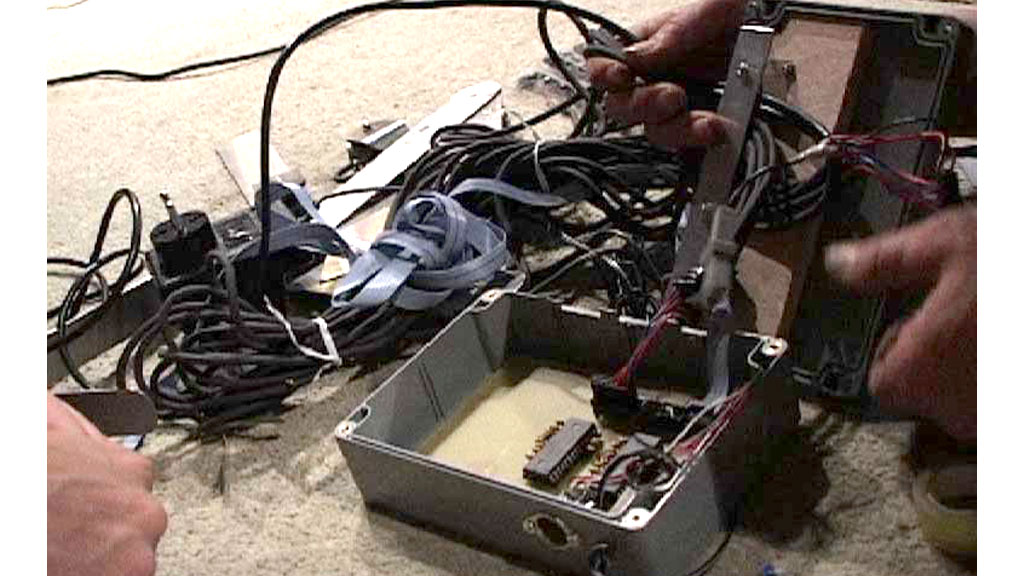

Chris produced the computer and fitted it in a small wireless box. This box is attached to a belt, together with a wireless MIDI transmitter. So the SenSorSuit can be used wirelessly. Very convenient.

Failed prototype

Before I found the flex sensors, I briefly experimented with improved metal sensors. These sensors were similar to the door hinges of the original SenSorSuit. This experiment failed, because these sensors were too rigid and uncomfortable to wear.

Performances

I used this SenSorSuit for many performances. I had a lot of success with the live video sampling shows. In these shows I used live recordings of the audience to create tracks live. The SenSorSuit made it easy to approach the crowd and interact with them, while still being able to adjust the live recorded samples to make them fit into the track and play with them.

Augmented Stage

In 2011, I developed Augmented Stage for my live shows. I noticed that people can get distracted by the suit, which makes them pay less attention to the visuals. They are trying to figure out what it is that I am doing. With Augmented Stage I control the show using a 3D camera. I literally step into the screen and interact with the visuals there. Another advantage is that I don’t need to wear anything to control the show. Anybody who steps in front of the camera can interact. That opens up many new creative possibilities and applications.

The SenSorSuit is a much better tool for direct video control though: it has no latency, so I can improvise with it like a real instrument. So the SenSorSuit is still one of my favorite interfaces to control audio-visuals.

Team

Produced and developed by:

- Harry Hofs

- Jeroen Hofs

- Chris Heijens

- Mattijs Kneppers